Join CRob as he sits down with Ram Iyengar, OpenSSF’s India community representative, to explore the unique challenges and opportunities of promoting open source security in one of the world’s largest developer communities. Ram shares his journey from computer science professor to developer evangelist, discusses the launch of LF India, and reveals why getting developers excited about security tools remains one of his biggest challenges. From spicy food preferences to Star Trek vs. Star Wars debates, this episode offers both insights into global open source security efforts and a glimpse into the passionate community builders making it happen.

CRob (00:21)

Welcome, welcome, welcome to What’s in the SOSS, the OpenSSF’s podcast where I talk to amazing people that are doing incredibly interesting things with upstream open source security. Today, we have a real friend of the show, one of my teammates, Ram, who helps represent our India community. And I would like to hear Ram, could you maybe give us a little bit of an introduction to yourself for those members that may not know who you are and what you’re doing for us?

Ram Iyengar (00:50)

Thanks for having me on the show, CRob. It’s such a pleasure to be a guest on a podcast that I’ve been very regular in listening to on several of the platforms.

CRob (01:02)

Yay!

Ram Iyengar (01:03)

So I’ve been working with the OpenSSF for a little over a year now. It’s been a wild ride in terms of learning a lot of things. And it’s been… honestly fun to represent security in a part of the world that I imagine doesn’t take security very seriously. But I also realized that’s true of many parts of the world.

CRob (01:30)

You’re not alone.

Ram Iyengar (01:33)

Yeah. In a geography that’s known for application development and a lot of software getting written, getting built, and an increasing number of open source contributions these days, it’s fun to hold the security placard and remind people: hey, security is important. Hey, don’t forget about security. Hey, open source folks, you still need to secure your goods. So that’s really what I do: evangelizing OpenSSF and a lot of the open source security stuff in the India geo.

CRob (02:12)

Excellent. Well, let’s hear a little bit about your backstory. What is your open source origin story Ram?

Ram Iyengar (02:20)

So I was one of those people fortunate enough to work on open source since the start. And when I say start, my first real job was working on some open source content management systems at work. Android caught on big around the time I finished school. And then in terms of roles, I was born in India in the early 90s. So I guess I was born to be a developer, and write software, but also I went to school trained to be an engineer, but I always wanted to be an educator. So after my first few years of being a software developer, I switched roles to be a computer science teacher full time where I went to school in India. So I went to school in Boston.

Got a master’s in telecommunication, did a lot of Android related stuff. And then went back to India, started as a professor of computer science. But then what I realized was, I love being a teacher and an educator, but I also love the salary in the software industry.

CRob (03:40)

Right?

Ram Iyengar (03:41)

And so, and so, eventually I found my path into technology, evangelism and developer relations. And I found that, you know, software and tools and all of these don’t necessarily suffer from a lack of features as much as they do from a lack of education. And so to me, it was, you know, writing guides and doing trainings and giving talks and writing documentation and contributing a lot of the non-technical stuff, both for products that I work with and open source projects that I love. So, one thing led to another and now it’s been like five years of working with the Linux Foundation full time. And, you know, a good chunk of that with the OpenSSF.

CRob (04:33)

That’s awesome. Yeah, thank you for doing all that. I really agree about the importance of education. That is something that is crucial if we’re going to help solve our mission together, right?

Ram Iyengar (04:45)

Absolutely. I remember one of my earliest OpenSSF community day events and you were on stage talking about the diagrammers and the education working group and all of that and yeah, that’s played a huge part in stuff that I’ve been doing. So thank you too.

CRob (05:06)

Oh, pff. Proud to contribute to helping out. So I’d like you to tell me more about LF India and your work with engaging the community there. What’s it like collaborating with other folks in India?

Ram Iyengar (05:22)

So LF India was announced in December of 2024. We’ve been rolling out the first steps, or rather the first invisible and boring steps of any entity, which is setting things up and getting some of those initial partnerships and conversations going. But all of that apart, I think, thanks in big part to the great work that the LF has been doing all around, it’s kind of marketed itself, to be honest. We have a whole raft of contributors who participate in a lot of LF initiatives already that are global, obviously. But we’re starting to realize certain flavors of sovereignty are coming in, and ideas that are specific to the region have to be focused on.

Ram Iyengar (06:19)

So LF India is sort of playing this role of replicating a lot of the good work that’s happening in other parts of the world, specifically for the India geo. And in the past few months, we’ve had some good conversations with people about what’s possible in terms of projects that can come on, in terms of initiatives that we can support, and in terms of conversations that we can have in the public sector, in academia, and obviously in the big… organizations and private sector that we’re most used to. So there’s a lot of interest in participating in LF India forums now. And part of it is online events and things like that. And a big part of it is also offline events.

Big thanks to the CNCF and Kubernetes in stewarding a lot of these conversations.

It goes without saying that they’re probably one of the more active open source communities right now. And piggybacking on that success, I think LF India is happy to announce the Open Source Summit event, which is sort of its flagship that happens in different parts of the world. And it’s going to be sandwiched between KubeCon in India and the OpenSSF Community Day in India as well, which I’m really excited about.

CRob (07:44)

You’re gonna have a really busy time, huh?

Ram Iyengar (07:47)

Yeah. I mean, it’s all happening. The conversations are there, the partnerships are coming forth, the events are happening. And so I think it’s the whole package. It makes me both extremely proud and privileged to be part of the opening cohort that’s helping herald some of these new changes in this part of the world.

CRob (08:10)

That’s awesome. I know most Linux Foundation entities kind of operate similarly, where we’ll have a webpage and a GitHub repository and then some mailing lists and whatnot. So if someone was curious about whether they wanted to get engaged with either LF India or your direct work with the OpenSSF, how best can someone kind of find out more about you and like what’s going on with that part of the world?

Ram Iyengar (08:38)

So the goal at the moment is to drive more awareness of LF itself. So I guess, you know, just do the individual project website. So CNCF has its website and the Slack and all of these. The OpenSSF has the openssf.org website, the OpenSSF Slack. So get on all of these. I’m accessible through LinkedIn and other things if you wanted to reach out directly. And right now the focus is to get more people to become aware of the LF projects directly. And obviously there’s going to be like an LF India web page and things like that. Like I said, it’s one of those boring pieces that we’re still getting together.

CRob (09:23)

Now I remember that you were doing a series of videos. Could you maybe talk a little bit about that?

Ram Iyengar (09:30)

Mm-hmm, yeah. Every once in a while, mostly at the frequency of like twice a month, or every fortnight, I try and identify somebody who’s working in the security space and is based out of India. So they can give us like a picture of what it’s like to be doing security in this geography. You know, I’ve had the good fortune of meeting so many wonderful guests. And we do like a 45 minute session where part of it is something of topical interest, like they’ll pick up an area that either they’re very happy to speak about or they feel that the community needs to be educated and energized about. And then a big chunk of it is also just an open conversation about here’s what I have encountered and help me validate these ideas or help me inform people about how important security is, especially when they’re working with open source and things like that. So I’ve had like 15, 20 guests up to now and they’re all recorded and available on YouTube. I usually stream them live and then thanks to technology, they’re available for consumption as a long tail for people. And these are on the OpenSSF YouTube channel. So those who are interested in catching any of these episodes in retrospect, you’re welcome to visit the OpenSSF YouTube channel. And there’s also always something that’s going to be up and coming. So if you subscribe to the channel, you can stay updated about what’s coming.

CRob (11:16)

Excellent. Yeah, I’ve really enjoyed some of your interviews over the last year or so. Top notch stuff. Thank you for doing that.

Ram Iyengar (11:23)

Sure. I mean, some of them are, you know, deeply technical, like runtime security, for example, and some of them have been more about how to build a security culture within an organization and what are the missing pieces in security that entry level developers should know and things like that, you know, so stuff that, you know, I feel will strike a good balance. And it’s been wonderful just discovering all this talent that’s always been around and I’ve never looked for security people before, but it’s amazing to see what comes up.

CRob (12:00)

That’s amazing. Now, I love the security community and especially the open source security community. Great folks. I love the fact that everyone’s so willing to kind of share whether they’re educating or kind of bringing a topic that they want to have a conversation about. I love that.

CRob (12:15)

Let’s move on to the rapid fire part of the show. Are you ready for rapid, rapid, rapid fire?

Ram Iyengar (12:22)

Ooh, I am.

CRob (12:23)

I have a bunch of silly questions. I just want to hear your first response off the top of your head. We’ll start off easy, mild or spicy food, sir.

Ram Iyengar (12:34)

Spicy.

CRob (12:37)

Oooh that’s spicy. I love spicy food too, although I’m not sure I could hang with you. I do my best.

Ram Iyengar (12:45)

Yeah, sure. I think spicy means something completely different in this part of the world.

CRob (12:51)

Like a different stratosphere. I have mad respect. Uh, VI or Emacs.

Ram Iyengar (12:57)

Oh, I’m a VI person, always happy.

CRob (13:03)

Excellent, excellent. Who’s your favorite open source mascot?

Ram Iyengar (13:06)

I like the Tekton mascot a lot. Obviously, like, Tux is a classic, but for the recent ones, Tekton has been my favorite. Although, you know, Honk, I think, deserves a special mention.

CRob (13:24)

We all love Honk. Excellent. What’s your favorite vegetable?

Ram Iyengar (13:32)

I love the versatility of an eggplant. Can do a lot with it. Yeah. Yeah.

CRob (13:38)

Yum. I love eggplant parmesan. That’s a delicious choice. And finally, and most importantly, Star Trek or Star Wars?

Ram Iyengar (13:47)

Star Trek, CRob.

CRob (13:50)

Hahahaha. There are no wrong answers, but yes, that’s an excellent one.

Ram Iyengar (13:54)

Yeah sure. But also like fun fact, I don’t know if this might get me in trouble, I have never watched any one of the Star Wars movies.

CRob (14:00)

WHAT?!

Ram Iyengar (14:01)

Yes. Yeah. This might alienate a lot of people or help me make new friends but yeah.

CRob (14:11)

[Sad Trombone] Well, I would encourage you to go watch; there are many options in the Star Wars universe. But Star Trek is pretty awesome.

Ram Iyengar (14:19)

It is.

CRob (14:21)

Well, thank you for sharing a little bit of insight about yourself. As we wind down, do you have a call to action or something you want to, you know, ask our audience to maybe look into or do?

Ram Iyengar (14:32)

It’s hard in the region that is India to get people to focus on security, especially when they’re working on open source stuff. Even if you look at a lot of the recent AI trends, for example, there’s a bunch of people who are focused on AI agents and MCP, and whatever new technology is going to come in a couple of days from now, you’ll find like 15 examples of people developing something. But you don’t see the same kind of enthusiasm around applying security tools. Even for the container ecosystem, everybody was in on cloud native. And then when you ask, did you scan that container as you run a build, people are like, “Why would I even think of doing that?” So it’s a hard problem. And when you have what by some of these estimates is going to be the largest developer population in the world or some crazy stuff like that, you really need to help them focus on security and educate them that secure apps are also good quality apps.

There was a lot of cloud-native development and blockchain development and AI development and all of these, but not enough emphasis on the security side of stuff. At the same time, that’s what the OpenSSF is here to help you with. Get a leg up on security stuff. Take a look at the projects and the working groups. It might really be worth your time. And so, let’s come together and help build an informed and educated security community around the wonderful app development community that we already have. So, you know, engage with the OpenSSF, engage with the Linux Foundation, whether it’s through events or meetups or, you know, just read through some of what the working groups are putting out and participate on Slack and throw in a comment or two on social media, just tiny things if you can. It goes a long way in helping open source move forward and build momentum. So if you can do any of those, I’d really be happy.

CRob (17:01)

Some great advice, and no matter where you live, there’s a ton of great content, so please share it with your communities. So, Ram, thank you for taking time today. I know you’re gonna be busy with that whole series of events, especially the Open Source Community Day in India, which will be pretty fun. Our second one, correct?

Ram Iyengar (17:23)

That’s right. So first one was in 2024, second one in 2025. I love how there’s a balance of a Linux security talk, security culture talk, some AI security stuff, some container security stuff. And I’m really grateful to the community to have come forward and submitted all these wonderful talks.

CRob (17:48)

Well, thank you for helping lead the community and helping educate them. And thank you for everything you do for us here at the OpenSSF.

Ram Iyengar (17:56)

My absolute pleasure, CRob. Thank you so much for all of that and having me on the show.

CRob (18:01)

You’re very welcome. And to all of our listeners, that’s a wrap. Happy open sourcing.

In this episode of What’s in the SOSS?, host Yesenia Yser sits down with open source security engineer and community leader Tabatha DiDomenico for an inspiring conversation about her unexpected path into open source, the vibrant communities behind security, and her role as president of BSides Orlando.

From discovering Netscape in the early days to shaping security strategy at G-Research and OpenSSF, Tabatha shares how her career evolved from necessity to purpose. She talks about the power of DevRel, the invisible work behind sustainable open source, and the magic of volunteering – pro-tip: working the registration table is great for networking.

Whether you’re new to the ecosystem or a seasoned contributor, this episode is packed with insight, warmth, and practical advice on getting involved and staying connected.

Topics Covered:

00:00 The Journey into Open Source

06:10 Current Projects and Roles in Open Source

11:57 Involvement with BSides Orlando

18:07 Understanding Developer Relations in Open Source

27:08 Rapid Fire Questions and Final Thoughts

Intro music (00:00)

Tabatha (00:04)

I immediately felt at ease. And I was like, oh gosh, people think, just like me, they, you know, they are curious. They want to break things, they want to put things back together again, and they’re just so generous with their time.

Yesenia (00:18)

Hello and welcome to What’s in the SOSS? OpenSSF’s podcast where we are talking to interesting people through the open source ecosystem. My name is Yesenia Yser. I’m one of your hosts and today we have an incredible treat. I’m talking to a close colleague and an open source extraordinaire, Tabatha DiDomenico, a security engineer that works on our open source. Welcome Tabatha. Welcome to introduce yourself to the audience.

Tabatha (00:47)

So thank you so much for having me today. My name is Tabatha DiDomenico. I am an open source security engineer at G-Research. And it’s been exciting to be a part of OpenSSF in various working groups and capacities over the past couple of years.

Yesenia (01:04)

Welcome. So glad to have you and we’ll start off with one of my favorite questions. Can you tell us about your journey in open source? What sparked your interest and just how has it grown over time?

Tabatha (01:14)

So this is an interesting question. I feel like when I reflect on my journey in open source, it doesn’t quite look like a journey because it was not an intentional thing. When I first began using open source, it was out of necessity. It’s what was available, probably thinking back to Netscape days. And that’s probably my first actual awareness that something was an open source project. A lot of the work that I did at the time, the organizations that I was with, the products that we used internally to power various organizations, we selected them because they were free and happened to be open source. When I think back to how it has been a journey over time, it’s become more intentional. My interest in open source has definitely become an intentional direction that I have set for my career.

You know, when I think back to those early days, I was using open source out of necessity rather than a desire to give back or to be part of something larger than myself. There were none of those intrinsic, lovely motivators that we had. It was really just out of necessity. And over time I was fortunate enough to be in a position to work with WordPress, and that was sort of the next evolution of my engagement in open source. I had built a small agency for myself doing WordPress development, website development, and also maintenance, and just getting familiar with the community and the resources that were available. It was not something that I had ever seen from any commercial software that I had been a part of. The large corporations don’t necessarily build these beautiful communities around their paid products. Some do.

But it’s incredibly rare, right? And so when I’d seen that there were these WordCamps and these hyper local conferences and events where people came together because of love for the product, love for the community, that was really compelling to me. From there, I had the incredible opportunity to actually get paid to work on open source through a product called the Dradis Framework, which pen testers in the security community may be familiar with, because it’s an open source penetration test writing tool. That’s where it kind of got its start. The founder of the company was originally a pen tester and wrote this tool in-house. All of the other pen testers began using it. It was one of those products that, once he open sourced it, the community thought, wow, this is really great. You’ve got something incredible here. Other people began using it. It sort of became the case of, you know, if you build it, they will come, your users will come. But then the problem became, how can you support this product in your spare time and still have a life? You know, so that’s when he began to look into releasing it as a commercial product as well.

And so, you know, seeing how community can build around open source, and having a hand in starting to shape a community around a product and build a community around a paid version of a product, further expanded my understanding of how open source can work and how open source can work in business. And now I’m here with G-Research, working with organizations like the Linux Foundation and OpenSSF, going to events like FOSDEM and seeing the scale of open source in our world. And knowing that I’m involved somehow feels really cool. So, you know, now it’s definitely intentional. I get paid to work in open source. It doesn’t necessarily look like me just, you know, writing PRs and pushing them all day long, because my work looks different. And that’s great, because it’s needed. Yeah. I’m not sure what else to add to that except that it’s been an incredible opportunity to witness the scale of open source and to get an understanding of the breadth of it. It’s fascinating to me, and a lot of the challenges that we face in security around open source are complex and not easily solved, and I like those kinds of problems.

Yesenia (05:53)

Yeah, and just like you said, the scale of it, just from, I think, my first open source conference to the latest, like just the number of attendees and people that are aware of them. It’s really great to see in the community. You know, thank you for your contributions and impact to make that happen. With that, I know you just mentioned earlier that you’re starting, you know, a new role. So I’d love for you to share any projects you’re currently working on and just what excites you the most about it.

Tabatha (06:22)

So a lot of my role, a lot of the work in my organization, I feel like more of an ecologist than anything else, an open source ecologist. While my title is open source security engineer, a lot of the work that I do is to help our organization be good stewards of the open source projects that are important to us or that we value in some way. And so how do I speak for an open source project in their community and ensure that how we’re interacting with that community is appropriate, that our vision aligns with the vision of the community itself and the direction of the open source component? And how can I best connect our internal resources with projects that I see could benefit from that support? That is sort of the crux of my work: how do we responsibly and securely contribute and participate in open source ecosystems?

Yesenia (07:42)

It’s a big challenge in scenarios today.

Tabatha (07:45)

It is, it is. And especially if you have a culture and an organization that’s not necessarily the most familiar with working in an open source way. So some of our recent projects have been, you know, looking at perhaps an inner source initiative and getting our start there, and giving folks that have never contributed to an open source project before a bit of confidence in working and collaborating with others in an open source way internally before they take that next step and start thinking about pushing things upstream.

Yesenia (08:20)

Yeah, because it’s interesting because it’s a whole different culture when you’re going from internal into an external phase. so building that culture inside to then take it out, I think is a smart way and approach to do it. Yeah.

Tabatha (08:34)

Yeah, yeah. So that’s been one of the very fun projects to work on and, just like I said, connecting folks with projects and solutions that I believe will solve the challenges they’re having or can help point them in a better direction to solve the challenges that they’re having.

Yesenia (08:53)

Yeah, and outside of that, it just sounds like you do a lot for open source, but you know folks like us we just add more hats to ourselves. You are the president of BSides Orlando. It was a great conference. Definitely attended last last year’s and I’m sure you are preparing for this year. How did you get it? You have to your head. How did you get involved with the organization and what’s next on your agenda for that like?

Tabatha (09:21)

So this is a fun story, and it speaks more to how I really embraced that I was working in security already without it being so much of a title. I was invited to attend Security BSides Orlando 2014. And just to back up for our audience here that may not be familiar with the Security BSides framework: it was born, I believe, in 2011. And it comes from the desire to elevate additional voices, to get folks involved in participating in information security, to create space for newcomers, and to have smaller events that are more local to a community, to bring speakers in to that community. I’m not explaining it well. I’d like to probably try that again. So the Security BSides framework started in around 2011, and it was a group of individuals that recognized that there were a number of speakers that kept returning to the stages of the larger security conferences. And so they looked to have a BSides version of those larger security conferences that was organized by the community, that brought in people and speakers and information that the local community wanted to hear or needed to hear, by the judgment of the organizers, and it has grown from there. It is not an official organization that’s run globally. There’s no, you know, contract that we have to sign. We don’t pay dues up to any sort of umbrella organization. While there is an organization that’s registered as Security BSides, each Security BSides event (and I believe at the last count I looked at it was over 200 events annually around the world) is organized by the community in that area. So it’s a community’s conference, is how I think about it and how I discuss it when we’re talking about planning Security BSides Orlando. So what I was getting back to wanting to share was my story of how I got involved.

I was invited to attend. A friend of mine had been telling me for a while that I was doing security, that I should consider looking into security as a career change for myself and to maybe go that direction. And if nothing else, that I would enjoy the community. So I attended BSides Orlando 2014. And I’ve shared this story on stage a couple of times. I picked my first lock at that conference and it’s like the lockpicking village to information security job pipeline just took hold. But it was more than that. It was more than just picking a lock. It was the willingness of the other attendees and the organizers to share information. I’m getting chills now thinking about it.

Yesenia (12:01)

You got me chills. like, I’m gonna, I am like,

Tabatha (12:03)

That’s how I felt as I walked in and I was greeted by John Singer, who, I don’t know if you know John Singer, but it feels like everybody knows him, at least, yeah, especially if you’re in the Florida cyber security world. It’s hard not to know John Singer. But he was just so welcoming, and here’s this guy who has organized this huge conference in this area, and my first interaction with him was nothing but just welcoming. And it can be so scary when you’re walking into a new environment like that, a new space, even if you have somebody encouraging you to be there and with you. But I immediately felt at ease and I was like, my gosh, these people think just like me. They, you know, they are curious. They want to break things. They want to put things back together again. And they’re just so generous with their time, with the goal of…

Yesenia (12:57)

Mm-hmm. Helping others.

Tabatha (12:58)

Helping others, yeah. I mean, yeah, hacking is, you know, that’s cool and it’s a cool thing to be involved in, but it’s more than that. You know, for the folks that I’m drawn to and for the communities that I’m drawn to, there is this sense of, yes, I need to do this work and also I’m doing this work because it is meaningful to me and I recognize that this meaningful work, despite our differences, is bettering your life too. And I think that’s great.

Yesenia (13:31)

Yeah, it’s one of my favorite things about the security communities. You know, one of the first communities I got involved in was security, and everyone was so welcoming that I was always applauded when they’re like, you know, they knew all the negatives in the space. And I was like, really? Everyone’s been so welcoming and nice in the tech community and open source, like that. So I definitely resonate with that. And lockpicking was one of the first things when I started security. They’re like, you can’t start your first ticket until you lockpick this. So they gave me like a kit and like three different locks and levels and they’re like, all right, we’ll start you off though. So if anyone’s interested in security, you know, you got to pick your first lock. You got it.

Tabatha (14:20)

Yeah, I think that’s the direct route I took all the time, but I’m sure that there’s others out there that have gotten their start in cybersecurity after picking their first lock at a BSides event, just like I did. But yeah, I know that it exists. I know that toxic behavior exists in information security and obviously, you know, in the years that I have been involved in this industry I have seen the numbers improve with regards to diversity and folks being accountable for their actions and holding others in the community accountable for their actions. So I don’t want to discount that it can be a difficult place sometimes, both working in security and working in the open source world. But by and large, that has not been my experience. My experience has been more similar to yours, where most of the folks that I have engaged with have been more than happy to sit with me and explain a difficult concept or a new approach, or most of the time they’re just excited to share whatever thing they’re nerding out about, and yeah.

Yesenia (15:27)

That’s it, we just wanna geek out with one another. Like, my god, did you see this new cool thing? Let’s play with it.

Tabatha (15:33)

Yeah. Yeah. We figured out this new way of doing things. You can enumerate blah, blah, blah faster. Like, let me show you. Okay, great. You know, or if I’ve got questions, they’re always more than willing to jump in and help. And from, yeah. So from there, I think that was, like I said, it was 2014. Later that year, I went to my first DEF CON, which is a whole thing. And I found much the same thing. You know, I found that the community was very welcoming, and here’s all these people that, you know, have lived very different lives and have very different experiences from my own. And still we’re aiming to solve similar problems, and working together seems like the best way to do that.

That year, funnily enough, I had worked with others that had been working. So I went to hacker summer camp that year, that first time, with others that were paid to do security. That was their job, right? I was still there on my own dime. And there’s a couple of conferences earlier in the week. One of them is Black Hat, and Black Hat is, you know, the more corporate version of the security week, of the other security conferences out there. And I couldn’t afford to go. So I looked around and I was like, well, surely there has to be a BSides or something. So I looked at BSides Las Vegas and they were still receiving volunteer applications. I applied and I volunteered that first year at BSides Las Vegas and I was hooked. That was all it took for me to just fall in love even further with the security community. And from there, it was a couple years before I could come back and get engaged. It was BSides Orlando 2019. I came on as staff and I ran the registration desk for the event that day. And night.

If you want to meet everybody at the conference that you are attending, I recommend volunteering to work at the registration desk, because that is a fast-track networking opportunity. And from there, I came on board in 2020. And then I was nominated and elected to take over as the president of BSides Orlando. I think it was the next year. We were still not quite cleared from COVID to be able to have an on-site event.

But in 2022, we returned on site and have been organizing an event annually since then. This year will be my fourth BSides Orlando event as the president. Yeah.

Yesenia (18:09)

Nice. Yeah, it was a great event. I had so much fun there. I did the badge soldering. I went to everything. Thank you for sharing that. It was such a… And for those that… I know you had mentioned hacker summer camp. Just for those that aren’t aware, hacker summer camp is a week in Vegas where there’s multiple security conferences. You have Black Hat, BSides, DEF CON, Squid Con.

Tabatha (18:33)

Diana Initiative.

Yesenia (18:35)

And Diana Initiative. There might be others that pop up. I know Hacker in Heels, they have their own salon that kind of runs there too, for, like, women’s networking events in cybersecurity. So if you’re a security professional, those are, yeah, worth the money.

Tabatha (18:48)

So definitely check it out. There’s a lot of ways to get out there too if you don’t have the funds to attend and maybe we can share some of those resources at some point.

Yesenia (19:00)

Yeah, maybe in the description, we’ll figure that out. I know you just transitioned over to security engineer, but before that you were doing DevRel, developer relations. And this is kind of a new space in the industry over the last couple of years. What role does DevRel play in the open source ecosystem? And for someone new that’s coming in, if this is something that interests them, how could they get started and start contributing meaningfully?

Tabatha (19:22)

That’s a great question. DevRel is a bit challenging to define, because each organization does developer relations a little bit differently. For our organization, like I said before, it’s sort of acting as the ecologist between the open source ecosystems that we’re involved in and our internal communities and engineers, acting as the steward between the two. As for what that actually looks like in practice, in my job it is to be a good steward of the projects that we publish and to ensure that the work that we’re putting out in the world is as high quality as possible, that the project is ready to receive users, and contributors even, which would be lovely for many of these projects. That is the sort of work that needs to be done to encourage new adoption of a project, or to encourage new contributors, or to encourage an existing contributor to consider becoming a maintainer and taking on additional responsibility. It requires somebody who’s not necessarily bogged down in triaging PRs and doing code reviews. It takes time to sit down and be thoughtful about how we want to encourage contributions. Do we have a solid contributing guide? Do we make it clear how to get started with even an issue? Do we make it clear how to be involved in this project? Then there’s advocacy for a project, if you recognize that there’s a project that just needs more awareness. Like I said before, not all projects are “if you build it, they will come.” Oftentimes, projects that are either a hobby project or something that’s new need that awareness building. It’s difficult to stumble across a new project sometimes, just because there’s so much out there. How do you make heads or tails of it?

So doing work for advocacy means advocating for various frameworks, perhaps ones that OpenSSF has established, something like S2C2F, to understand how best to consume open source in your organization; advocating internally for using additional things like SLSA, and understanding the different paths to SLSA and how that could interplay in your organization; or even going out and talking about SLSA so that people who work at other organizations have knowledge of these various tools, frameworks, and projects that are there to enable folks to do the work of building open source and being secure while working in open source.

Yesenia (22:36)

Yeah, it’s awesome. I know OpenSSF has the DevRel community meeting that happens once a month. I think it’s a great call for folks that are interested to come in and see what the group is working on.

Tabatha (22:49)

Yeah. And there’s lots of opportunities. You know, in each of the working groups that OpenSSF has, there’s brilliant people working on solving really challenging problems. Once those problems are solved technically, there still is this bit of advocacy that needs to happen. You have to take that project and then promote it a bit to get more adopters, because without feedback on how this actually works in practice, you’re not getting the best project or outcomes, because the diversity of opinion is so low. And there’s many different ways to solve all of these problems. So the more of us that come together to share how this works in practice, the better we can make it for all of us.

Yesenia (23:31)

And test it too. I think you just got into a good point. Sometimes we just need people to use it and see, does the guide make sense? Like, that was one of the things that hurt me the most when I would pull a new open source tool: the user guide. And I’m just like, they had all these dependencies installed and I had to figure out which ones to install. And I’m like, can we just add this? Like, what do I need installed before? If I got a brand new computer, what do I need? You know, just to start. Cool.

Tabatha (24:00)

Yeah, that’s one of those things. We work with Major League Hacking, MLH, and I have a Developer Relations fellow each semester. Yeah, great org. If your organization has the ability to get involved with MLH, I encourage you to do so. And if you’re listening to this and you’re a candidate to become an MLH fellow, I encourage you to do it. Every single one of the fellows that has come through our doors has been just top notch.

So that aside, it can be a challenge to introduce DevRel to somebody who’s young and excited about working in open source and they’re chomping at the bit to solve their first technical issue and get to coding, right? And then you have to break it to them. That’s not what we’re doing. We’re doing all of the other stuff, the in-between stuff that has to get done in order for people to actually use this.

And then, you know, they’re just kind of like, “Oh, well, that doesn’t sound nearly as interesting.” And then I kind of do this thing where I’m like, well, do you use any open source in your own time, in your hobby projects? Have you ever released anything? Have you ever gone and tried to play, like, an open source game? Is there anything that you’ve seen before? And sometimes they’ll come in and will have definitely a very clear opinion about open source.

And sometimes they’ll come back and look at it, you know, it just kind of is the thing. But inevitably I always hear back that they have a greater appreciation for good documentation after having worked with us to do DevRel, because they see the value in it now. They understand that it doesn’t just happen. There’s no just, like, running AI on it to generate, you know, quality documentation. Maybe somebody out there has a tool that does it brilliantly now.

But it’s unlikely. There’s always nuance to these things. So I think that exposure to DevRel creates a different sort of appreciation for the invisible work, the labor that has to go into open source in order for it to flourish and thrive and to give open source projects the best chance at success in the environments that they’re in.

Yesenia (26:16)

There’s so much behind it. People just think it’s coding. I’m like, no, we can use so much more help. Great, let’s move on to the rapid-fire part of the interview. I’m gonna shoot the questions, first that comes to mind, and we’ll keep flowing. So first question: Star Wars or Star Trek?

Tabatha (26:21)

Star Wars.

Yesenia (26:30)

Early bird or night owl?

Tabatha (26:42)

Ooh, both, depends on the day.

Yesenia (26:45)

Okay, I’ll take it. I get that. I get that. Books or podcasts?

Tabatha (26:51)

I would say, see, I finished a master’s program a couple years ago and I’m still recovering from having to read all of that. That’s happened every time I’ve gotten a degree. So I’m going to go podcasts, but normally, when I’m not still in graduate-level brain, it would be books. Yeah.

Yesenia (27:10)

Yeah, your brain burns out. I get that. Like, it’s just recent where I’ve been able to like pick up a book and like, pretty much become addicted to it. Like, I can’t do anything else until I’m done with the book. It’s great.

Tabatha (27:21)

That’s great. I miss being at that level with a new book. So hopefully soon.

Yesenia (27:27)

Took me years. I couldn’t pick up books, but I have a huge library. Last one: spicy or mild food?

Tabatha (27:33)

Spicy, absolutely spicy. Yeah. I grew up between Texas and South Florida. So it’s spicy all the way.

Yesenia (27:44)

You got it, best of both worlds. Well, thank you for your time. I want to give you space to leave any last minute advice, thoughts for the audience.

Tabatha (27:54)

I’d say any last minute advice or thoughts. I would say get involved. Don’t be afraid. It’s not as scary as it seems and show up in person if there’s an event near you. Excuse me. Let me try that again. So I think that.

Tabatha (28:39)

I think my final thoughts on this would be to get involved in the community, because that’s really where I have found the most benefit for myself personally. Reach out, get an understanding of the project. If you’re curious about getting involved and you’re a little nervous to get started and are unsure, even if those good first issues look too scary to you, hop on a community call. If there’s a contributing call, just go and lurk. Attend something where you are engaging with other people and not only the code base, because that’s really where you’re going to get more insights on how everything gets put together, how the project works, how the community works together, and whether or not you actually want to be a part of that community before you get involved. So I say jump in.

Yesenia (28:39)

Totally jump in, and volunteer for events too. I think that’s another great one. Well, thank you for your leadership and contributions to our communities. You know, many thanks to our listeners and our open source contributors and the community of folks that help drive all of our projects forward. Tabatha, I appreciate your time today and I look forward to seeing all your impact in 2025. Thank you.

Tabatha (28:44)

Volunteer friends. Yeah, absolutely.

Tabatha (28:47)

Thank you, Yesenia. It was great chatting with you today.

A Framework That Works

Cybersecurity isn’t just the responsibility of a dedicated team anymore. Whether you’re an engineer, a product owner, or part of the executive suite, your day-to-day decisions have a direct impact on your organization’s security. That was the clear message from the expert panel featured in our webinar, Cybersecurity Skills: A Framework That Works — now available to watch on demand.

Leaders from IBM, Intel, Linux Foundation Education and the Open Source Security Foundation (OpenSSF) share real-world insights on how their organizations are tackling one of today’s biggest challenges: upskilling the entire workforce in security. The panelists discussed the new Cybersecurity Skills Framework, an open, flexible tool designed to help teams identify the right skills for the right roles — and actually get started improving them. It’s practical, customizable, and already helping global organizations raise their security posture.

In the webinar, you’ll hear how to:

The conversation is packed with actionable advice, whether you’re building a security training program or just want to understand where you or your team stands.

🎥Access the Cybersecurity Skills, Simplified Webinar

BONUS: Receive a 30% Discount for any Security-Related Course, Certification or Bundle Just for Watching

Need to Close the Skills Gap Across Your Team or Enterprise?

OpenSSF participated in the 2025 UN Open Source Week, a global gathering of participants hosted by the United Nations Office for Digital and Emerging Technologies, focused on harnessing open source innovation to achieve the Sustainable Development Goals (SDGs). Held in New York City, the event gathered technology leaders, policymakers, and open source advocates to address critical global challenges.

On June 20, OpenSSF joined a featured panel discussion during a community-led side event curated by RISE Research Institutes of Sweden, OpenForum Europe, and CURIOSS. The panel, titled “Securing the Supply Chain Through Global Collaboration,” explored how standardized practices and international cooperation enhance open source software security and align with emerging regulatory frameworks such as the EU Cyber Resilience Act (CRA).

Panelists included:

The session highlighted the critical need for international cooperation to secure global software systems effectively. Panelists discussed the emerging role of generative AI (GenAI) and its implications for open source security. The importance of developer education in how to develop secure software was also noted; as developers must increasingly review GenAI results, they will need more, not less, education.

“It was both a great opportunity to share the work of the Gen AI Security Project and insights on the challenges and benefits generative AI brings to our discussion on securing open source and the software supply chain,” said Scott Clinton.

“The United Nations brought together a global community where nations become collaborators rather than competitors,” added Arun Gupta. “It’s thrilling to see the open source community advancing solutions for global problems.”

Earlier that week (June 16–17), the UN Tech Over Hackathon drew over 200 global innovators to address SDG-aligned challenges through open source technology. The hackathon featured three distinct tracks:

The Maintain-a-Thon, organized in partnership with Alpha-Omega and the Sovereign Tech Agency, engaged over 40 participants across 15 breakout sessions. Senior maintainers offered guidance on issue triage, documentation improvements, and best practices for long-term project maintenance, reinforcing open source software’s foundational role in global digital infrastructure.

🔗 Read the official UN Tech Over press release

🔗 Read Arun Gupta’s blog post on “Ahead of the Storm”

UN Open Source Week 2025 underscored the importance of collaborative innovation in securing and sustaining digital public infrastructure. Aligned with its mission, OpenSSF remains dedicated to facilitating global cooperation, promoting secure-by-design best practices, providing educational resources, and supporting innovative technical initiatives. By empowering maintainers and contributors of all skill levels, OpenSSF aims to ensure open source software remains trusted, secure, and reliable for everyone.

Foundation furthers mission to enhance the security of open source software

DENVER – OpenSSF Community Day North America – June 26, 2025 – The Open Source Security Foundation (OpenSSF), a cross-industry initiative of the Linux Foundation that focuses on sustainably securing open source software (OSS), welcomes six new members from leading technology and security companies. New general members include balena, Buildkite, Canonical, Trace Machina, and Triam Security and associate members include Erlang Ecosystem Foundation (EEF). The Foundation also presents the Golden Egg Award during OpenSSF Community Day NA 2025.

“We are thrilled to welcome six new member companies and honor existing contributors during our annual North America Community Day event this week,” said Steve Fernandez, General Manager at OpenSSF. “As companies expand their global footprint and depend more and more on interconnected technologies, it is vital we work together to advance open source security at every layer – from code to systems to people. With the support of our new members, we can share best practices, push for standards and ensure security is front and center in all development.”

The OpenSSF continues to shine a light on those who go above and beyond in our community with the Golden Egg Awards. The Golden Egg symbolizes gratitude for awardees’ selfless dedication to securing open source projects through community engagement, engineering, innovation, and thoughtful leadership. This year, we celebrate:

Their efforts have made a lasting impact on the open source security ecosystem, and we are deeply grateful for their continued contributions.

OpenSSF is supported by more than 3,156 technical contributors across OpenSSF projects – providing a vendor-neutral partner to affiliated open source foundations and projects. Recent project updates include:

New and existing OpenSSF members are gathering this week in Denver at the annual OpenSSF Community Day NA 2025. Join the community at upcoming 2025 OpenSSF-hosted events, including OpenSSF Community Day India, OpenSSF Community Day Europe, OpenSSF Community Day Korea, and Open Source SecurityCon 2025.

“At balena, we understand that securing edge computing and IoT solutions is critical for all companies deploying connected devices. As developers focused on enabling reliable and secure operations with balenaCloud, we’re deeply committed to sharing our knowledge and expertise. We’re proud to join OpenSSF to contribute to open collaboration, believing that together we can build more mature security solutions that truly help companies protect their edge fleets and raise collective awareness across the open-source ecosystem.”

– Harald Fischer, Security Aspect Lead, balena

“Joining OpenSSF is a natural extension of Buildkite’s mission to empower teams with secure, scalable, and resilient software delivery. With Buildkite Package Registries, our customers get SLSA-compliant software provenance built in. There’s no complex setup or extra tooling required. We’ve done the heavy lifting so teams can securely publish trusted artifacts from Buildkite Pipelines with minimal effort. We’re excited to collaborate with the OpenSSF community to raise the bar for open source software supply chain security.”

– Ken Thompson, Vice President of Product Management, Buildkite

“Protecting the security of the open source ecosystem is not an easy feat, nor one that can be tackled by any single industry player. OpenSSF leads projects that are shaping this vast landscape. Canonical is proud to join OpenSSF on its mission to spearhead open source security across the entire market. For over 20 years we have delivered security-focused products and services across a broad spectrum of open source technologies. In today’s world, software security, reliability, and provenance is more important than ever. Together we will write the next chapter for open source security frameworks, processes and tools for the benefit of all users.”

– Luci Stanescu, Security Engineering Manager, Canonical

“Starting in 2024, the EEF’s Security WG focused community resources on improving our supply chain infrastructure and tooling to enable us to comply with present and upcoming cybersecurity laws and directives. This year we achieved OpenChain Certification (ISO/IEC 5230) for the core Erlang and Elixir libraries and tooling, and also became the default CVE Numbering Authority (CNA) for all open-source Erlang, Elixir and Gleam language packages. Joining the OpenSSF has been instrumental in connecting us to experts in the field and facilitating relationships with security practitioners in other open-source projects.”

– Alistair Woodman, Board Chair, Erlang Ecosystem Foundation

“Trace Machina is a technology company, founded in September 2023, that builds infrastructure software for developers to go faster. Our current core product, NativeLink, is a build caching and remote execution platform that speeds up compute-heavy work. As a company we believe both in building our products open source whenever possible, and in supporting the open source ecosystem and community. We believe open source software is a crucial philosophy in technology, especially in the security space. We’re thrilled to join the OpenSSF as a member organization and to continue being active in this wonderful community.”

– Tyrone Greenfield, Chief of Staff, Trace Machina

“Triam Security is proud to join the Open-Source Security Foundation to support its mission of strengthening the security posture of critical open source software. As container security vulnerabilities continue to pose significant risks to the software supply chain, our expertise in implementing SLSA Level 3/4 controls and building near-zero CVE solutions through CleanStart aligns perfectly with OpenSSF’s supply chain security initiatives. We look forward to collaborating with the community on advancing SLSA adoption, developing security best practices, improving vulnerability management processes, and promoting standards that enhance the security, transparency, and trust in the open-source ecosystem.”

– Biswajit De, CTO, Triam Security

About the OpenSSF

The Open Source Security Foundation (OpenSSF) is a cross-industry organization at the Linux Foundation that brings together the industry’s most important open source security initiatives and the individuals and companies that support them. The OpenSSF is committed to collaboration and working both upstream and with existing communities to advance open source security for all. For more information, please visit us at openssf.org.

Media Contact

Natasha Woods

The Linux Foundation

Welcome to the June 2025 edition of the OpenSSF Newsletter! Here’s a roundup of the latest developments, key events, and upcoming opportunities in the Open Source Security community.

The recent Tech Talk, “CRA-Ready: How to Prepare Your Open Source Project for EU Cybersecurity Regulations,” brought together open source leaders to explore the practical impact of the EU’s Cyber Resilience Act (CRA). With growing pressure on OSS developers, maintainers, and vendors to meet new security requirements, the session provided a clear, jargon-free overview of what CRA compliance involves.


Speakers included CRob (OpenSSF), Adrienn Lawson (Linux Foundation), Dave Russo (Red Hat), and David A. Wheeler (OpenSSF), who shared real-world examples of how organizations are preparing for the regulation, even with limited resources. The discussion also highlighted the LFEL1001 CRA course, designed to help OSS contributors move from confusion to clarity with actionable guidance.

Watch the session here.

The Open Source Technology Improvement Fund (OSTIF) addresses a critical gap in open source security by conducting tailored audits for high-impact OSS projects often maintained by small, under-resourced teams. Through its active role in OpenSSF initiatives and strategic partnerships, OSTIF delivers structured, effective security engagements that strengthen project resilience. By leveraging tools like the OpenSSF Scorecard and prioritizing context-specific approaches, OSTIF enhances audit outcomes and fosters a collaborative security community. Read the full case study to explore how OSTIF is scaling impact, overcoming funding hurdles, and shaping the future of OSS security.

✨GUAC 1.0 is Now Available

Discover how GUAC 1.0 transforms the way you manage SBOMs and secure your software supply chain. This first stable release of the “Graph for Understanding Artifact Composition” platform moves beyond isolated bills of materials to aggregate and enrich data from file systems, registries, and repositories into a powerful graph database. Instantly tap into vulnerability insights, license checks, end-of-life notifications, OpenSSF Scorecard metrics, and more. Read the blog to learn more.

✨Maintainers’ Guide: Securing CI/CD Pipelines After the tj-actions and reviewdog Supply Chain Attacks

CI/CD pipelines are now prime targets for supply chain attacks. Just look at the recent breaches of reviewdog and tj-actions, where chained compromises and log-based exfiltration let attackers harvest secrets without raising alarms. In this Maintainers’ Guide, Ashish Kurmi breaks down exactly how those exploits happened and offers a defense-in-depth blueprint that every open source project needs today, from pinning actions to full commit SHAs and enforcing MFA to monitoring for tag tampering and isolating sensitive secrets. Read the full blog to learn practical steps for locking down your workflows before attackers do.

✨From Sandbox to Incubating: gittuf’s Next Step in Open Source Security

gittuf, a platform-agnostic Git security framework, has officially progressed to the Incubating Project stage under the OpenSSF, marking a major milestone in its development, community growth, and mission to strengthen the open source software supply chain. By adding cryptographic access controls, tamper-evident logging, and enforceable policies directly into Git repositories without requiring developers to abandon familiar workflows, gittuf secures version control at its core. Read the full post to see how this incubation will accelerate gittuf’s impact and how you can get involved.

✨Choosing an SBOM Generation Tool

With so many tools available to build SBOMs, from single-language tools like npm-sbom and CycloneDX’s language-specific generators to multi-language options such as cdxgen, syft, and Tern, how do you know which one to pick? Nathan Naveen helps you decide by comparing each tool’s dependency analysis, ecosystem support, and CI/CD integration, and reminds us that “imperfect SBOMs are better than no SBOMs.” Read the blog to learn more.

✨OSS and the CRA: Am I a Manufacturer or a Steward?

The EU Cyber Resilience Act (CRA) introduces critical distinctions for those involved in open source software, particularly between manufacturers and a newly defined role: open source software stewards. In this blog, Mike Bursell of OpenSSF breaks down what these terms mean, why most open source contributors won’t fall under either category, and how the CRA acknowledges the unique structure of open source ecosystems. If you’re wondering whether the CRA applies to your project or your role, this post offers clear insights and guidance. Read the full blog to understand your position in the new regulatory landscape.

#33 – S2E10 “Bridging DevOps and Security: Tracy Ragan on the Future of Open Source”: In this episode of What’s in the SOSS, host CRob sits down with longtime open source leader and DevOps champion Tracy Ragan to trace her journey from the Eclipse Foundation to her work with Ortelius, the Continuous Delivery Foundation, and the OpenSSF. CRob and Tracy dig into the importance of configuration management, DevSecOps, and projects like the OpenSSF Scorecard and Ortelius in making software supply chains more transparent and secure, plus strategies to bridge the education gap between security professionals and DevOps engineers.

#32 – S2E09 “Yoda, Inclusive Strategies, and the Jedi Council: A Conversation with Dr. Eden-Reneé Hayes”: In this episode of What’s in the SOSS, host Yesenia Yser sits down with DEI strategist, social psychologist, and Star Wars superfan Dr. Eden-Reneé Hayes to discuss the myths around DEIA and why unlearning old beliefs is key to progress. Plus, stay for the rapid-fire questions and discover if Dr. Hayes is more Marvel or DC.

The Open Source Security Foundation (OpenSSF), together with Linux Foundation Education, provides a selection of free e-learning courses to help the open source community build stronger software security expertise. Learners can earn digital badges by completing offerings such as:

These are just a few of the many courses available for developers, managers, and decision-makers aiming to integrate security throughout the software development lifecycle.

Join us at OpenSSF Community Day Events in North America, India, Japan, Korea and Europe!

OpenSSF Community Days bring together security and open source experts to drive innovation in software security.

Connect with the OpenSSF Community at these key events:

Ways to Participate:

There are a number of ways for individuals and organizations to participate in OpenSSF. Learn more here.

You’re invited to…

We want to get you the information you most want to see in your inbox. Missed our previous newsletters? Read here!

Have ideas or suggestions for next month’s newsletter about the OpenSSF? Let us know at marketing@openssf.org, and see you next month!

Regards,

The OpenSSF Team

By Mihai Maruseac (Google), Eoin Wickens (HiddenLayer), Daniel Major (NVIDIA), Martin Sablotny (NVIDIA)

As AI adoption continues to accelerate, so does the need to secure the AI supply chain. Organizations want to be able to verify that the models they build, deploy, or consume are authentic, untampered, and compliant with internal policies and external regulations. From tampered models to poisoned datasets, the risks facing production AI systems are growing — and the industry is responding.

In collaboration with industry partners, the AI/ML Working Group of the Open Source Security Foundation (OpenSSF) recently delivered a model signing solution. Today, we are formalizing the signature format as OpenSSF Model Signing (OMS): a flexible and implementation-agnostic standard for model signing, purpose-built for the unique requirements of AI workflows.

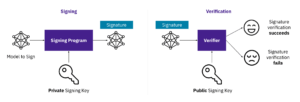

Model signing is a cryptographic process that creates a verifiable record of the origin and integrity of machine learning models. Recipients can verify that a model was published by the expected source, and has not subsequently been tampered with.

Signing AI artifacts is an essential step in building trust and accountability across the AI supply chain. For projects that depend on open source foundational models, project teams can verify the models they are building upon are the ones they trust. Organizations can trace the integrity of models — whether models are developed in-house, shared between teams, or deployed into production.

Key stakeholders that benefit from model signing:

Model signing uses cryptographic keys to ensure the integrity and authenticity of an AI model. A signing program uses a private key to generate a digital signature for the model. This signature can then be verified by anyone using the corresponding public key. These keys can be generated a priori, obtained from signing certificates, or generated transparently during the Sigstore signing flow. If verification succeeds, the model is confirmed as untampered and authentic; if it fails, the model may have been altered or is untrusted.

Figure 1: Model Signing Diagram

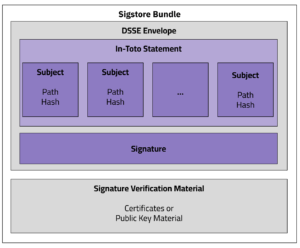
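The sign-then-verify flow described above can be sketched in a few lines of Python. This is a teaching sketch only, not the OMS implementation: it uses textbook RSA with two small, publicly known primes and no padding, so it is deliberately insecure. The names `sign`, `verify`, and `digest_as_int` are hypothetical and do not come from the model-signing library.

```python
import hashlib

# Toy parameters (assumption for illustration): two known Mersenne primes.
# Real signing keys are far larger and use a padded signature scheme.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                              # public modulus
E = 65537                              # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))      # private exponent (Python 3.8+)


def digest_as_int(model_bytes: bytes) -> int:
    """Hash the model bytes and reduce the digest into the toy modulus."""
    return int.from_bytes(hashlib.sha256(model_bytes).digest(), "big") % N


def sign(model_bytes: bytes) -> int:
    """Publisher side: sign the digest with the private exponent."""
    return pow(digest_as_int(model_bytes), D, N)


def verify(model_bytes: bytes, signature: int) -> bool:
    """Recipient side: check the signature using only the public values."""
    return pow(signature, E, N) == digest_as_int(model_bytes)


weights = b"pretend these bytes are model weights"
signature = sign(weights)

untouched_ok = verify(weights, signature)          # same bytes as signed
tampered_ok = verify(weights + b"!", signature)    # one extra byte appended
print(untouched_ok, tampered_ok)
```

Only the holder of `D` can produce a signature, while anyone who knows `N` and `E` can check it, which is the property that lets recipients verify a model without being able to forge one.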

OMS is designed to handle the complexity of modern AI systems, supporting any type of model format and models of any size. Instead of treating each file independently, OMS uses a detached OMS Signature Format that can represent multiple related artifacts—such as model weights, configuration files, tokenizers, and datasets—in a single, verifiable unit.
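To make the idea of a single verifiable unit more concrete, here is a minimal sketch of a manifest that covers several model files at once. It is an illustration under stated assumptions, not the OMS Signature Format itself: the manifest layout (relative path mapped to SHA-256 digest) and the helper names `build_manifest` and `changed_files` are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def build_manifest(model_dir: Path) -> dict[str, str]:
    """Map each file's relative path to its SHA-256 digest."""
    manifest = {}
    for path in sorted(model_dir.rglob("*")):
        if path.is_file():
            rel = path.relative_to(model_dir).as_posix()
            # Reads the whole file at once; large weights would be streamed.
            manifest[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return manifest


def changed_files(expected: dict[str, str], model_dir: Path) -> list[str]:
    """Return paths added, removed, or modified relative to `expected`."""
    actual = build_manifest(model_dir)
    return sorted(
        name
        for name in expected.keys() | actual.keys()
        if expected.get(name) != actual.get(name)
    )


with tempfile.TemporaryDirectory() as tmp:
    model_dir = Path(tmp)
    (model_dir / "weights.bin").write_bytes(b"\x00\x01\x02")
    (model_dir / "config.json").write_text('{"layers": 2}')
    (model_dir / "tokenizer.txt").write_text("hello world")

    # The manifest lives outside the model files, so they stay unmodified.
    manifest = build_manifest(model_dir)
    clean = changed_files(manifest, model_dir)

    # Simulate tampering with one of the related artifacts.
    (model_dir / "config.json").write_text('{"layers": 3}')
    tampered = changed_files(manifest, model_dir)

print(clean)      # []
print(tampered)   # ['config.json']
```

Signing one manifest like this, rather than each file separately, means a single signature check covers weights, configuration, and tokenizer together, and any added, removed, or altered file shows up as a mismatch.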

The OMS Signature Format includes:

The OMS Signature File follows the Sigstore Bundle Format, ensuring maximum compatibility with existing Sigstore (a graduated OpenSSF project) ecosystem tooling. This detached format allows verification without modifying or repackaging the original content, making it easier to integrate into existing workflows and distribution systems.

OMS is PKI-agnostic, supporting a wide range of signing options, including:

This flexibility enables organizations to adopt OMS without changing their existing key management or trust models.

Figure 2: OMS Signature Format

As reference implementations to speed adoption, OMS offers both a command-line interface (CLI) for lightweight operational use and a Python library for deep integration into CI/CD pipelines, automated publishing flows, and model hubs. Other library integrations are planned.

Other examples, including signing using PKCS#11, can be found in the model-signing documentation.

This design enables better interoperability across tools and vendors, reduces manual steps in model validation, and helps establish a consistent trust foundation across the AI lifecycle.

The release of OMS marks a major step forward in securing the AI supply chain. By enabling organizations to verify the integrity, provenance, and trustworthiness of machine learning artifacts, OMS lays the foundation for safer, more transparent AI development and deployment.

Backed by broad industry collaboration and designed with real-world workflows in mind, OMS is ready for adoption today. Whether integrating model signing into CI/CD pipelines, enforcing provenance policies, or distributing models at scale, OMS provides the tools and flexibility to meet enterprise needs.

This is just the first step towards a future of secure AI supply chains. The OpenSSF AI/ML Working Group is engaging with the Coalition for Secure AI to incorporate additional AI metadata into the OMS Signature Format, such as training data sources, model version, hardware used, and compliance attributes.

To get started, explore the OMS specification, try the CLI and library, and join the OpenSSF AI/ML Working Group to help shape the future of trusted AI.

Special thanks to the contributors driving this effort forward, including Laurent Simon, Rich Harang, and the many others at Google, HiddenLayer, NVIDIA, Red Hat, Intel, Meta, IBM, Microsoft, and in the Sigstore, Coalition for Secure AI, and OpenSSF communities.

Mihai Maruseac is a member of the Google Open Source Security Team (GOSST), working on Supply Chain Security for ML. He is a co-lead on a Secure AI Framework (SAIF) workstream from Google. Under OpenSSF, Mihai chairs the AI/ML working group and the model signing project. Mihai is also a GUAC maintainer. Before joining GOSST, Mihai created the TensorFlow Security team and prior to Google, he worked on adding Differential Privacy to Machine Learning algorithms. Mihai has a PhD in Differential Privacy from UMass Boston.

Eoin Wickens, Director of Threat Intelligence at HiddenLayer, specializes in AI security, threat research, and malware reverse engineering. He has authored numerous articles on AI security, co-authored a book on cyber threat intelligence, and spoken at conferences such as SANS AI Cybersecurity Summit, BSides SF, LABSCON, and 44CON, and delivered the 2024 ACM SCORED opening keynote.

Daniel Major is a Distinguished Security Architect at NVIDIA, where he provides security leadership in areas such as code signing, device PKI, ML deployments and mobile operating systems. Previously, as Principal Security Architect at BlackBerry, he played a key role in leading the mobile phone division’s transition from BlackBerry 10 OS to Android. When not working, Daniel can be found planning his next travel adventure.

Martin Sablotny is a security architect for AI/ML at NVIDIA working on identifying existing gaps in AI security and researching solutions. He received his Ph.D. in computing science from the University of Glasgow in 2023. Before joining NVIDIA, he worked as a security researcher in the German military and conducted research in using AI for security at Google.
