By Mihai Maruseac, Google Open Source Security Team, OpenSSF AI/ML WG lead; Martin Sablotny, NVIDIA, model signing co-lead; Eoin Wickens, HiddenLayer, model signing co-lead; Daniel Major, NVIDIA, model signing co-lead

We are pleased to announce the launch of version 1.0 of the model-signing project, an OpenSSF project developed over the past year as part of the OpenSSF AI/ML working group. The project provides a library and CLI for signing and verifying ML models, supporting any model format and models of any size. It also supports several types of Public Key Infrastructure (PKI), such as signing with Sigstore (a graduated OpenSSF project), self-signed certificates, or public/private key pairs, while keeping the same hashing scheme and signature format (a Sigstore bundle) across all of them.
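To make the "any format, any size" and "same hashing scheme across PKIs" properties concrete, here is a minimal Python sketch under stated assumptions: a model is treated as a directory of opaque files, each file is streamed through SHA-256, and the per-file digests are folded into one digest that a pluggable signer then signs. The names (`hash_file`, `build_manifest`, `manifest_digest`, `sign_model`, `Signer`) and the exact digest layout are hypothetical; this is not the model-signing library's API or its actual hashing format.

```python
import hashlib
from pathlib import Path
from typing import Callable

# Hypothetical chunk size: files are streamed, so memory use stays flat for huge weights.
CHUNK_SIZE = 1 << 20  # 1 MiB

# Hypothetical signer interface: takes the bytes to sign, returns signature bytes.
# Sigstore, a self-signed certificate, or a raw key pair would each be one implementation.
Signer = Callable[[bytes], bytes]


def hash_file(path: Path) -> str:
    """SHA-256 hex digest of one file, read in fixed-size chunks (format-agnostic)."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(CHUNK_SIZE), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(model_dir: Path) -> dict[str, str]:
    """Map every file's relative POSIX path to its SHA-256 digest."""
    return {
        p.relative_to(model_dir).as_posix(): hash_file(p)
        for p in sorted(model_dir.rglob("*"))
        if p.is_file()
    }


def manifest_digest(manifest: dict[str, str]) -> str:
    """Fold per-file digests into one digest, so a single signature covers the model."""
    h = hashlib.sha256()
    for rel_path in sorted(manifest):
        h.update(rel_path.encode("utf-8") + b"\0")
        h.update(manifest[rel_path].encode("ascii") + b"\n")
    return h.hexdigest()


def sign_model(model_dir: Path, signer: Signer) -> tuple[str, bytes]:
    """Hash the model the same way regardless of PKI; only `signer` changes."""
    digest = manifest_digest(build_manifest(model_dir))
    return digest, signer(digest.encode("ascii"))
```

Because the hashing step never inspects file contents, the same code covers a single weights file or a sharded checkpoint directory, and changing PKI in this sketch only means passing a different `signer`.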

Signing ML artifacts is an essential step in ensuring the integrity of the ML supply chain. This is especially important because the team that trains a foundation model is generally not the same as the team that deploys it into production, a gap widened by the mass proliferation of pretrained models. Open source models are stored in model hubs such as Kaggle and Hugging Face, but nothing indicates that a model uploaded there matches what was intended during training. Similarly, there is no means to trace a model's provenance back to its creator.

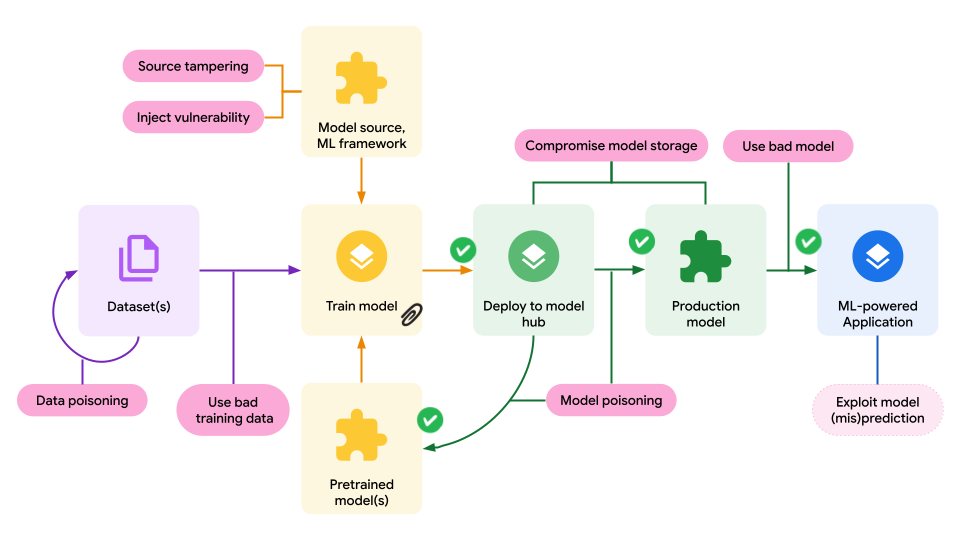

We can build a diagram similar to the one used by SLSA, adapted to the particularities of ML: to build a model, we need datasets, model source, ML frameworks, and, optionally, pretrained models. Despite these differences, the supply chain risks are mostly the same:

The supply chain diagram for building a single model, illustrating some supply chain risks (oval labels) and where model signing can defend against them (check marks)

Preventing model supply chain compromises via model signatures involves signing models during training and verifying these signatures every time the model is used. This includes verification when the model is uploaded to a model hub, when it is selected for deployment into an application, and when it is used as input for another model.
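The "sign once, verify at every use" pattern can be sketched as a single gate that each consumer runs before trusting the model. This is a minimal Python sketch, assuming a model is a directory of files; to stay dependency-free it uses an HMAC with a shared secret as a stand-in for a real signature, which is not how a PKI such as Sigstore or a public/private key pair works. All names (`model_digest`, `verify_before_use`, `STAGES`, `hmac_sign`, `hmac_verify`) are hypothetical and are not part of the model-signing library.

```python
import hashlib
import hmac
from pathlib import Path
from typing import Callable

# Hypothetical verifier interface: returns True iff `signature` is valid for `payload`.
Verifier = Callable[[bytes, bytes], bool]

# The checkpoints where a consumer should verify before trusting the model.
STAGES = ("upload to model hub", "deployment into an application", "input to another model")

# DEMO ONLY: an HMAC with a shared secret stands in for a real PKI signature so the
# sketch has no third-party dependencies. It is NOT a public-key signature.
_DEMO_KEY = b"demo-only-secret"


class ModelVerificationError(Exception):
    """Raised when a model's contents do not match its signature."""


def model_digest(model_dir: Path) -> bytes:
    """SHA-256 over (relative path, file digest) pairs in sorted path order."""
    h = hashlib.sha256()
    for p in sorted(model_dir.rglob("*")):
        if p.is_file():
            h.update(p.relative_to(model_dir).as_posix().encode("utf-8") + b"\0")
            # Reads each file whole for brevity; a real tool would stream large files.
            h.update(hashlib.sha256(p.read_bytes()).digest())
    return h.digest()


def hmac_sign(payload: bytes) -> bytes:
    return hmac.new(_DEMO_KEY, payload, hashlib.sha256).digest()


def hmac_verify(payload: bytes, signature: bytes) -> bool:
    # Constant-time comparison of the expected and presented tags.
    return hmac.compare_digest(hmac_sign(payload), signature)


def verify_before_use(model_dir: Path, signature: bytes, verify: Verifier, stage: str) -> None:
    """Gate every consumer runs: recompute the digest and check the signature."""
    if not verify(model_digest(model_dir), signature):
        raise ModelVerificationError(f"{stage}: signature check failed for {model_dir}")
```

In this sketch, signing happens once at the end of training (`hmac_sign(model_digest(model_dir))`), and the same `verify_before_use` call is repeated at each stage in `STAGES`; replacing the HMAC stand-in with a real verifier changes only the `verify` argument.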

This is just the first step toward a future of secure ML supply chains. As part of the OpenSSF AI/ML working group and the OpenSSF model signing meetings, we aim to build on model signatures: first extending them to dataset signatures, then incorporating other ML-related artifacts and metadata, as proposed under the Coalition for Secure AI.

Please join us via GitHub, Slack, or the mailing list if you are interested in working toward a secure AI future. We want to thank all the participants in the related working groups for helping us reach this first stable release.

About the Authors

Mihai Maruseac is a member of the Google Open Source Security Team (GOSST), working on Supply Chain Security for ML. He is a co-lead on a Secure AI Framework (SAIF) workstream from Google. Under OpenSSF, Mihai chairs the AI/ML working group and the model signing project. Mihai is also a GUAC maintainer. Before joining GOSST, Mihai created the TensorFlow Security team and prior to Google, he worked on adding Differential Privacy to Machine Learning algorithms. Mihai has a PhD in Differential Privacy from UMass Boston.

Eoin Wickens, Director of Threat Intelligence at HiddenLayer, specializes in AI security, threat research, and malware reverse engineering. He has authored numerous articles on AI security, co-authored a book on cyber threat intelligence, spoken at conferences such as SANS AI Cybersecurity Summit, BSides SF, LABSCON, and 44CON, and delivered the opening keynote at ACM SCORED 2024.

Daniel Major is a Distinguished Security Architect at NVIDIA, where he provides security leadership in areas such as code signing, device PKI, ML deployments, and mobile operating systems. Previously, as Principal Security Architect at BlackBerry, he played a key role in leading the mobile phone division’s transition from BlackBerry 10 OS to Android. When not working, Daniel can be found planning his next travel adventure.

Martin Sablotny is a security architect for AI/ML at NVIDIA, working on identifying existing gaps in AI security and researching solutions. He received his PhD in computing science from the University of Glasgow in 2022. Before joining NVIDIA, he worked as a security researcher in the German military and conducted research on using AI for security at Google.