Foundation furthers mission to enhance the security of open source software

DENVER – OpenSSF Community Day North America – June 26, 2025 – The Open Source Security Foundation (OpenSSF), a cross-industry initiative of the Linux Foundation that focuses on sustainably securing open source software (OSS), welcomes six new members from leading technology and security companies. New general members include balena, Buildkite, Canonical, Trace Machina, and Triam Security, and the Erlang Ecosystem Foundation (EEF) joins as an associate member. The Foundation also presents the Golden Egg Award during OpenSSF Community Day NA 2025.

“We are thrilled to welcome six new member companies and honor existing contributors during our annual North America Community Day event this week,” said Steve Fernandez, General Manager at OpenSSF. “As companies expand their global footprint and depend more and more on interconnected technologies, it is vital we work together to advance open source security at every layer – from code to systems to people. With the support of our new members, we can share best practices, push for standards and ensure security is front and center in all development.”

Golden Egg Award Recipients

The OpenSSF continues to shine a light on those who go above and beyond in our community with the Golden Egg Awards. The Golden Egg symbolizes gratitude for awardees’ selfless dedication to securing open source projects through community engagement, engineering, innovation, and thoughtful leadership. This year, we celebrate:

- Ian Dunbar-Hall (Lockheed Martin) – for contributions to the bomctl and SBOMit projects

- Hayden Blauzvern (Google) – for leadership in the Sigstore project

- Marcela Melara (Intel) – for contributions to SLSA and leadership in the BEAR Working Group

- Yesenia Yser (Microsoft) – for work as a podcast co-host and leadership in the BEAR Working Group

- Zach Steindler (GitHub) – for leadership on the Technical Advisory Committee (TAC) and in the Securing Software Repositories Working Group

- Munehiro “Muuhh” Ikeda – for work as an LF Japan Evangelist and helping to put together OpenSSF Community Day Japan

- Adolfo “Puerco” Garcia Veytia – for support on Protobom, OpenVEX and Baseline projects

Their efforts have made a lasting impact on the open source security ecosystem, and we are deeply grateful for their continued contributions.

Project Updates

OpenSSF is supported by more than 3,156 technical contributors across OpenSSF projects – providing a vendor-neutral partner to affiliated open source foundations and projects. Recent project updates include:

- Gittuf, a platform-agnostic Git security system, has advanced to an incubating project under OpenSSF. This milestone marks the maturity and adoption of the project.

- OpenBao, a software solution to manage, store, and distribute sensitive data including secrets, certificates, and keys, joined OpenSSF as a sandbox project.

- Open Source Project Security Baseline (OSPS Baseline) was released. It provides a structured set of security requirements aligned with international cybersecurity frameworks, standards, and regulations, aiming to bolster the security posture of open source software projects.

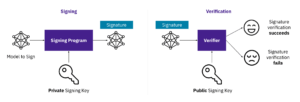

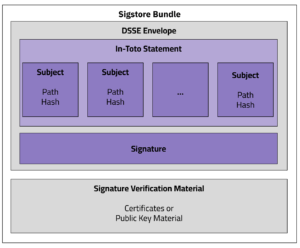

- Model Signing released version 1.0 to secure the machine learning supply chain.

- GUAC released version 1.0 to bring stability to the core functionality.

- SLSA released version 1.1 RC2 to enhance the clarity and usability of the original specification.

Events and Gatherings

New and existing OpenSSF members are gathering this week in Denver at the annual OpenSSF Community Day NA 2025. Join the community at upcoming 2025 OpenSSF-hosted events, including OpenSSF Community Day India, OpenSSF Community Day Europe, OpenSSF Community Day Korea, and Open Source SecurityCon 2025.

Additional Resources

- View the complete list of OpenSSF members

- Contribute efforts to one or more of the active OpenSSF working groups and projects

- Connect with OpenSSF at Black Hat USA 2025 and DEF CON 2025

Supporting Quotes

“At balena, we understand that securing edge computing and IoT solutions is critical for all companies deploying connected devices. As developers focused on enabling reliable and secure operations with balenaCloud, we’re deeply committed to sharing our knowledge and expertise. We’re proud to join OpenSSF to contribute to open collaboration, believing that together we can build more mature security solutions that truly help companies protect their edge fleets and raise collective awareness across the open-source ecosystem.”

– Harald Fischer, Security Aspect Lead, balena

“Joining OpenSSF is a natural extension of Buildkite’s mission to empower teams with secure, scalable, and resilient software delivery. With Buildkite Package Registries, our customers get SLSA-compliant software provenance built in. There’s no complex setup or extra tooling required. We’ve done the heavy lifting so teams can securely publish trusted artifacts from Buildkite Pipelines with minimal effort. We’re excited to collaborate with the OpenSSF community to raise the bar for open source software supply chain security.”

– Ken Thompson, Vice President of Product Management, Buildkite

“Protecting the security of the open source ecosystem is not an easy feat, nor one that can be tackled by any single industry player. OpenSSF leads projects that are shaping this vast landscape. Canonical is proud to join OpenSSF on its mission to spearhead open source security across the entire market. For over 20 years we have delivered security-focused products and services across a broad spectrum of open source technologies. In today’s world, software security, reliability, and provenance is more important than ever. Together we will write the next chapter for open source security frameworks, processes and tools for the benefit of all users.”

– Luci Stanescu, Security Engineering Manager, Canonical

“Starting in 2024, the EEF’s Security WG focused community resources on improving our supply chain infrastructure and tooling to enable us to comply with present and upcoming cybersecurity laws and directives. This year we achieved OpenChain Certification (ISO/IEC 5230) for the core Erlang and Elixir libraries and tooling, and also became the default CVE Numbering Authority (CNA) for all open-source Erlang, Elixir and Gleam language packages. Joining the OpenSSF has been instrumental in connecting us to experts in the field and facilitating relationships with security practitioners in other open-source projects.”

– Alistair Woodman, Board Chair, Erlang Ecosystem Foundation

“Trace Machina is a technology company, founded in September 2023, that builds infrastructure software for developers to go faster. Our current core product, NativeLink, is a build caching and remote execution platform that speeds up compute-heavy work. As a company we believe both in building our products open source whenever possible, and in supporting the open source ecosystem and community. We believe open source software is a crucial philosophy in technology, especially in the security space. We’re thrilled to join the OpenSSF as a member organization and to continue being active in this wonderful community.”

– Tyrone Greenfield, Chief of Staff, Trace Machina

“Triam Security is proud to join the Open-Source Security Foundation to support its mission of strengthening the security posture of critical open source software. As container security vulnerabilities continue to pose significant risks to the software supply chain, our expertise in implementing SLSA Level 3/4 controls and building near-zero CVE solutions through CleanStart aligns perfectly with OpenSSF’s supply chain security initiatives. We look forward to collaborating with the community on advancing SLSA adoption, developing security best practices, improving vulnerability management processes, and promoting standards that enhance the security, transparency, and trust in the open-source ecosystem.”

– Biswajit De, CTO, Triam Security

About the OpenSSF

The Open Source Security Foundation (OpenSSF) is a cross-industry organization at the Linux Foundation that brings together the industry’s most important open source security initiatives and the individuals and companies that support them. The OpenSSF is committed to collaboration and working both upstream and with existing communities to advance open source security for all. For more information, please visit us at openssf.org.

Media Contact

Natasha Woods

The Linux Foundation

The recent Tech Talk, “

Mihai Maruseac is a member of the Google Open Source Security Team (GOSST), working on Supply Chain Security for ML. He is a co-lead on a Secure AI Framework (SAIF) workstream from Google. Under OpenSSF, Mihai chairs the AI/ML working group and the model signing project. Mihai is also a GUAC maintainer. Before joining GOSST, Mihai created the TensorFlow Security team and prior to Google, he worked on adding Differential Privacy to Machine Learning algorithms. Mihai has a PhD in Differential Privacy from UMass Boston.

Daniel Major is a Distinguished Security Architect at NVIDIA, where he provides security leadership in areas such as code signing, device PKI, ML deployments and mobile operating systems. Previously, as Principal Security Architect at BlackBerry, he played a key role in leading the mobile phone division’s transition from BlackBerry 10 OS to Android. When not working, Daniel can be found planning his next travel adventure.