In this episode of “What’s in the SOSS,” we welcome back Sarah Evans, Distinguished Engineer at Dell Technologies and a key figure in the OpenSSF’s AI/ML Security working group. Sarah discusses the critical work being done to extend secure software development practices to the rapidly evolving field of AI. She dives into the AI Model Signing project, the groundbreaking MLOps whitepaper developed in partnership with Ericsson, and the crucial work of identifying and addressing new personas in AI/ML operations. Tune in to learn how OpenSSF is shaping the future of AI security and what challenges and opportunities lie ahead.

Summary

Conversation Highlights

0:00 Welcome and Introduction to Sarah Evans

0:48 Sarah Evans: Role at Dell Technologies and Involvement in OpenSSF

1:38 The OpenSSF AI/ML Working Group: Genesis and Goals

3:37 Deep Dive: The AI Model Signing Project with Sigstore

4:28 AI Model Signing: Benefits for Developers

5:20 Transition to the MLSecOps White Paper

5:49 The Mission of the MLSecOps White Paper: Addressing Industry Gaps

7:00 Collaboration with Ericsson on the MLSecOps White Paper

8:15 Identifying and Addressing New Personas in AI/ML Ops

10:04 The Power of Open Source in Extending Previous Work

10:15 Future Directions for OpenSSF’s AI/ML Strategy

11:21 OpenSSF’s Broader AI Security Focus

12:08 Sneak Peek: New Companion Video Podcast on AI Security

12:31 Sarah’s Personal Focus: The Year of the Agents (2025)

13:00 Security Concerns: Bringing Together Data Models and Code in AI Applications

14:00 Conclusion and Thanks

Episode Links

- Sarah Evans LinkedIn page

- OpenSSF AI/ML Security Working Group

- OpenSSF Blog: Visualizing Secure MLOps (MLSecOps): A Practical Guide for Building Robust AI/ML Pipeline Security

- OpenSSF Whitepaper: Visualizing Secure MLOps (MLSecOps): A Practical Guide for Building Robust AI/ML Pipeline Security

- Get involved with the OpenSSF

- Subscribe to the OpenSSF newsletter

- Follow the OpenSSF on LinkedIn

Transcript

0:00 Intro Music & Promo Clip: We have so much experience in applying secure software development to CI/CD and software, we can extend what we’ve learned to the data teams and to those AI/ML engineering teams because ultimately, I don’t think that we want a world where we have to do separate security governance across AI apps.

CRob:

0:20: Welcome, welcome, welcome to What’s in the SOSS, where we talk to interesting characters from around the open source security ecosystem: maintainers, engineers, thought leaders, contributors. And I just get to talk to a lot of really great people along the way. Today we have a friend of the show; we’ve already had discussions with her in the past. I am so pleased and proud to introduce my friend Sarah Evans. Sarah, for our audience, could you maybe just remind them, you know, who you are, what you do, and what you’ve been up to since our last talk?

Sarah Evans:

0:57: Well, thanks for having me here. I’m a distinguished engineer at Dell Technologies, and I have two roles. One is I do security applied research for my company looking at the future of security in our products and what innovation that we need to explore to improve the security by design. My second role is to activate my company to participate in OpenSSF, which I have thoroughly enjoyed getting to work with friends such as yourselves. I am very active and engaged in the AI/ML working group and trying to advocate for AI security.

CRob:

1:37: Awesome, yeah. And that actually brings us to our talk today. Our friends within your working group, the AI/ML working group, you’ve had a flurry of activity lately. I would love to talk about, you know, first off, let’s give the audience some context. Let’s talk about what this group is, and what are some of your goals.

Sarah Evans:

1:58: Yeah, so the AI/ML working group really kind of came into fruition about a year and a half ago, I think, and we needed a space where we could talk about how the work that software developers were doing would change as they started to build applications that had AI in it. So were there things that we were doing today that could apply to the way the technology was changing?

One of the initial concerns was: secure software development, we know a lot about that, but we may know less about AI. So is a home for AI in OpenSSF appropriate? Should we be deeply partnering with some of the other foundations that are creating these data sets, creating the tools and models? And so we started the working group where our commitment to the tech was that we would deeply engage with the other groups around the ecosystem, which we have done. But then we’ve also been looking for where are those places that are uniquely in the OpenSSF wheelhouse or swim lane of expertise on extending software security to AI applications, and I think that we’ve done a really good job of kind of exploring some of those places.

One of them has been with a white paper that we are partnering with another member in Ericsson to deliver, and that is something that we’re very proud of sharing with the community.

CRob:

3:28: Great, I’m really excited to talk about these projects because I for one welcome our robot overlords. Let’s first off start off – we had a big, you guys had a big announcement that really seems to have captured the imagination of the community. Let’s talk about the AI model signing project.

Sarah Evans:

3:47: Yes, so the model signing project, we worked that as a special interest group within our working group. We were approached by some folks who are working in partnership with Sigstore, and the idea was that if you can use Sigstore to sign code, could you extend Sigstore to sign a model and close a gap in the industry? And as you know, we were able to do that. There was a team of people that came together in the open source fashion to extend a tool to a new use case. And that’s just been very exciting to watch that evolve.

CRob

4:27: That’s awesome.

Sarah Evans:

4:28: So thinking about it from the developer perspective, I’m a developer working in the AI, how does this help me?

CRob

4:36: Right?

Sarah Evans:

4:36: So right now if you are pulling a model off of Hugging Face as an example, you don’t have any cryptographic digital signature on that model that verifies it, the way you would with code. And so if that model has been signed with the Sigstore components, then now you have the information that you would use to validate code. You can also follow some of those similar processes to validate a signed model.

CRob

5:07: Pretty cool.

Sarah Evans

5:08: Yeah, it’s a really good use case for supply chain security, and for extending what we know about software to models and data that are part of our AI applications.

CRob

5:20: This seems to be kind of a theme for you, taking classic ASA and applying it to the newer technologies. So let’s move on to the white paper. You and I have collaborated around some graphics for this, and then you’ve got a couple of folks you’re working with on the white paper. You’re shepherding it through review and publication, and you should be able to read that now. So let’s talk about the white paper, you know, what’s it about? What’s kind of the mission of it?

Sarah Evans

5:49: When the AI/ML working group first kicked off, I knew that we had seen this evolution of developing on open source software and processes called DevOps and then those evolved to DevSecOps over time. And so with the disruptive technology around AI/ML, I wanted to know what were the processes that a data scientist or an AI/ML engineer used and did they have the security governance they needed in their operational processes.

So I started to look at what is DataOps, what is MLOps, what is LLMOps, like all the alphabet soup of ops. And I couldn’t find a lot of information online. And so I thought, this is an industry gap that we have, and we have so much experience in applying secure software development to CI/CD and software.

We can extend what we’ve learned to the data teams and to those AI/ML engineering teams because ultimately I don’t think that we want a world where we have to do separate security governance across AI apps that have these different operational pieces in them.

I was doing my research and I found a white paper by Ericsson on MLSecOps in the telco environment. Ericsson being a fellow member of OpenSSF, I, you know, worked through their OSPO and through some of the connections that we have in OpenSSF said, Hey, can you introduce me to those authors? I would love to see if we could up level that as a general resource to the community as an OpenSSF whitepaper. We were able to do that. They have been a fantastic partner in collaboration.

And so now we have for the industry an MLSecOps white paper reference architecture and some documentation about extending in two ways:

- One is if you’re a software developer now and you’re being asked to build an AI app, you have more information about what goes on in that MLOps environment.

- And if you are a person who’s creating an MLOps app and you haven’t had secure development training before, you now have a resource. So it really serves both existing members of our community and potential new members of our community.

CRob

8:14: That’s really awesome. Congrats on that. Another area that we’ve collaborated on: the OpenSSF has a series of personas. We have 5 personas, and that kind of organizes and drives our work. We have a maintainer/developer persona, an OSPO persona, an executive persona, and so forth. But one thing that you came to me with, that you realized early on as you were developing this white paper, is there were some gaps. Could you maybe talk about those gaps and what we’ve done to address them?

Sarah Evans

8:46: Yeah, where we found the gaps were in sub-personas, so those main core personas that OpenSSF has been working with were just solid. We still have developers and maintainers, we still have security engineers…we still have folks working in our open source program offices, but the sub-personas were very software developer focused.

They really didn’t include some of the personas that we were seeing related to curating data sets, putting together end-to-end architectures, or putting together a pipeline for machine learning as a data engineer. So I worked based off of the language in that original Ericsson white paper that we have up-leveled to an OpenSSF white paper to take those personas that work in that MLOps space and add them as sub-personas within OpenSSF. So now we can all start to have the same language and understanding around who might be developing software applications, new members of our community that we want to be inclusive of, and have language to understand how to reach them and partner with them.

CRob

10:04: I just love the power of open source where you find some previous work, you get value out of it, and then you expand it. Thank you so much for contributing that back.

Sarah Evans

10:13: Absolutely.

CRob

10:15: And where are you going from here? Where are the next steps around the white paper?

Sarah Evans

10:19: I think we want to spend some time championing, and then, you know, meeting with our community. We’ve discovered that potentially OpenSSF would like to have a broader AI/ML strategy or program, and so really understanding how those strategic efforts will evolve and making sure that we can plug into those and provide resources that strategically move OpenSSF forward into this new space. Those could include an MLSecOps document or maybe even a converged enterprise view of multiple ops, but we’re also open to just looking at maybe some of the other areas that have been identified, such as dealing with potentially AI slop or other concerns related to AI/ML.

I think there’s a really great opportunity for OpenSSF to look through our stack of tools and processes and understand how we can extend those to AI/ML use cases and applications.

I know that there is an opportunity to have a strategic program around AI and securing AI applications, and I’m really excited and looking forward to what the future of OpenSSF tools, processes, procedures, best practices look like so we can really support our software developers as they’re developing secure AI applications.

CRob

11:12: That’s awesome. I’m really looking forward to collaborating with you all and kind of championing and showcasing the work going forward. So thank you very much.

Let’s move along. We will be creating a new companion video podcast focused on this amazing community of AI security experts we have here within OpenSSF and within the broader community, and we’ll be talking about AI security news and topics. And I’m going to give this, take this opportunity to give the listeners a sneak peek of what we might be discussing very soon. So from your perspective, Sarah, you know, beyond these cool projects that you’re working on, what are you personally keeping an eye on in this fast moving AI space?

Sarah Evans

12:42: Well, I’ll tell you, 2025 is the year of the agents, and understanding the accelerated rate of impact that agents will have on AI applications has been something I’ve been spending a lot of time on.

CRob

12:56: Pretty cool. I’m looking forward to learning more with everyone together. And from your perspective again, what’s keeping you up at night in regards to this crazy AI/ML, LLM, GenAI, agentic, blah blah blah, machine space? What are you concerned about from a security perspective?

Sarah Evans

I think for me, from a security perspective, bringing together data models and deploying it with code really puts an end-to-end AI application. It puts a lot of pressure on teams that may not have had to tightly work together before to begin to tightly work together. And so that’s why the personas and the converged operations and thinking about how do we apply what security we know to new areas is so important, because we don’t have a moment to lose.

There’s such accelerated excitement around leveraging AI and leveraging agents that it’s going to be very important for us to have a common way to talk to each other and to begin to solve problems and challenges so that we can innovate with this technology.

CRob

13:59: Excellent. Well, Sarah, I really appreciate your time, coming to talk to us about these amazing things going on and kind of giving us a sneak peek into the future. And you know, I want to thank you again on behalf of the foundation, our community, and you know, all the maintainers and enterprises that we serve. So thanks for showing up today.

Sarah Evans

14:17: Yeah, thanks, CRob.

CRob

14:18: Yeah, and that’s a wrap today. Thank you for listening to What’s in the SOSS. Have a great day and happy open sourcing.

Outro

14:29: Like what you’re hearing? Be sure to subscribe to What’s in the SOSS on Spotify, Apple Podcasts, AntennaPod, Pocket Casts, or wherever you get your podcasts. There’s a lot going on with the OpenSSF and many ways to stay on top of it all. Check out the newsletter for open source news, upcoming events, and other happenings. Go to OpenSSF.org/newsletter to subscribe. Connect with us on LinkedIn for the most up-to-date OpenSSF news and insight, and be a part of the OpenSSF community at OpenSSF.org/getinvolved. Thanks for listening and we’ll talk to you next time on What’s in the SOSS.

By Mihai Maruseac (Google), Eoin Wickens (HiddenLayer), Daniel Major (NVIDIA), Martin Sablotny (NVIDIA)

As AI adoption continues to accelerate, so does the need to secure the AI supply chain. Organizations want to be able to verify that the models they build, deploy, or consume are authentic, untampered, and compliant with internal policies and external regulations. From tampered models to poisoned datasets, the risks facing production AI systems are growing — and the industry is responding.

In collaboration with industry partners, the Open Source Security Foundation (OpenSSF)’s AI/ML Working Group recently delivered a model signing solution. Today, we are formalizing the signature format as OpenSSF Model Signing (OMS): a flexible and implementation-agnostic standard for model signing, purpose-built for the unique requirements of AI workflows.

What is Model Signing

Model signing is a cryptographic process that creates a verifiable record of the origin and integrity of machine learning models. Recipients can verify that a model was published by the expected source, and has not subsequently been tampered with.

Signing AI artifacts is an essential step in building trust and accountability across the AI supply chain. For projects that depend on open source foundational models, project teams can verify the models they are building upon are the ones they trust. Organizations can trace the integrity of models — whether models are developed in-house, shared between teams, or deployed into production.

Key stakeholders that benefit from model signing:

- End users gain confidence that the models they are running are legitimate and unmodified.

- Compliance and governance teams benefit from traceable metadata that supports audits and regulatory reporting.

- Developers and MLOps teams are equipped to trace issues, improve incident response, and ensure reproducibility across experiments and deployments.

How does Model Signing Work

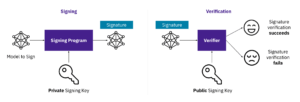

Model signing uses cryptographic keys to ensure the integrity and authenticity of an AI model. A signing program uses a private key to generate a digital signature for the model. This signature can then be verified by anyone using the corresponding public key. These keys can be generated a priori, obtained from signing certificates, or generated transparently during the Sigstore signing flow. If verification succeeds, the model is confirmed as untampered and authentic; if it fails, the model may have been altered or is untrusted.

Figure 1: Model Signing Diagram
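The sign-then-verify flow described above can be illustrated with a deliberately tiny sketch. It uses textbook RSA (no padding) over a SHA-256 digest, with two small Mersenne primes chosen purely so the example runs on the Python standard library. This is an insecure teaching toy, not how OMS or Sigstore implement signing, and the names `sign_model` and `verify_model` are hypothetical.

```python
import hashlib

# Toy key material: two small Mersenne primes. Far too small to be secure;
# chosen only so the example needs nothing beyond the standard library.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                           # public modulus
E = 65537                           # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (needs Python 3.8+)


def digest_as_int(model_bytes: bytes) -> int:
    """Hash the model and reduce the digest so it fits below the toy modulus."""
    return int.from_bytes(hashlib.sha256(model_bytes).digest(), "big") % N


def sign_model(model_bytes: bytes) -> int:
    """Signer side: transform the digest with the private exponent."""
    return pow(digest_as_int(model_bytes), D, N)


def verify_model(model_bytes: bytes, signature: int) -> bool:
    """Verifier side: undo the transform with the public exponent and compare."""
    return pow(signature, E, N) == digest_as_int(model_bytes)


model = b"pretend these bytes are model weights"
signature = sign_model(model)

original_ok = verify_model(model, signature)         # untouched model
tampered_ok = verify_model(model + b"!", signature)  # one extra byte
```

Only the holder of `D` can produce a signature that verifies, while anyone who knows `N` and `E` can check it. A production signer would rely on a vetted cryptographic library and a padded or elliptic-curve scheme rather than this construction.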

How Does OMS Work

OMS Signature Format

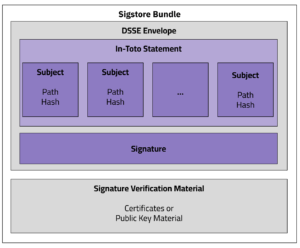

OMS is designed to handle the complexity of modern AI systems, supporting any type of model format and models of any size. Instead of treating each file independently, OMS uses a detached OMS Signature Format that can represent multiple related artifacts—such as model weights, configuration files, tokenizers, and datasets—in a single, verifiable unit.

The OMS Signature Format includes:

- A list of all files in the bundle, each referenced by its cryptographic hash (e.g., SHA256)

- An optional annotations section for custom, domain-specific fields (future support coming)

- A digital signature that covers the entire manifest, ensuring tamper-evidence
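As a rough illustration of the manifest idea listed above, the following sketch hashes every file in a model directory and then hashes a canonical encoding of the resulting manifest. The JSON layout, the `files` and `annotations` keys, and the function names are assumptions made for this example; they are not the actual OMS Signature Format.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def build_manifest(model_dir: Path) -> dict:
    """Map each file's relative path to its SHA-256 digest, in sorted order."""
    files = {}
    for path in sorted(p for p in model_dir.rglob("*") if p.is_file()):
        rel = path.relative_to(model_dir).as_posix()
        # read_bytes() is fine for a demo; large weights would be streamed.
        files[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return {"files": files, "annotations": {}}


def manifest_digest(manifest: dict) -> str:
    """Digest of a canonical JSON encoding; a signature would cover this value."""
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


with tempfile.TemporaryDirectory() as tmp:
    model_dir = Path(tmp)
    (model_dir / "weights.bin").write_bytes(b"\x00\x01\x02")
    (model_dir / "config.json").write_text('{"layers": 2}')

    before = build_manifest(model_dir)
    digest_before = manifest_digest(before)

    # Changing one file changes its hash, and therefore the manifest digest.
    (model_dir / "weights.bin").write_bytes(b"\x00\x01\x03")
    digest_after = manifest_digest(build_manifest(model_dir))
```

Because one signature covers the digest of the whole manifest, a change to any listed file (or to the list itself) invalidates that single signature, which is what lets a bundle of related artifacts be treated as one verifiable unit.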

The OMS Signature File follows the Sigstore Bundle Format, ensuring maximum compatibility with existing Sigstore (a graduated OpenSSF project) ecosystem tooling. This detached format allows verification without modifying or repackaging the original content, making it easier to integrate into existing workflows and distribution systems.
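To make the "detached" property concrete, here is a minimal sketch in which the manifest lives beside the model directory and the check only reads the model files. The manifest layout and the `check_against_manifest` helper are hypothetical, and the sketch omits verifying the signature over the manifest itself, which a real verifier would do first.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large weights need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def check_against_manifest(model_dir: Path, manifest_path: Path) -> dict:
    """Compare files on disk with a detached manifest; never writes to model_dir."""
    expected = json.loads(manifest_path.read_text())["files"]
    actual = {
        p.relative_to(model_dir).as_posix(): sha256_file(p)
        for p in model_dir.rglob("*") if p.is_file()
    }
    return {
        "modified": sorted(k for k in expected
                           if k in actual and actual[k] != expected[k]),
        "missing": sorted(k for k in expected if k not in actual),
        "unexpected": sorted(k for k in actual if k not in expected),
    }


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    model_dir = root / "model"
    model_dir.mkdir()
    (model_dir / "weights.bin").write_bytes(b"weights-v1")
    (model_dir / "tokenizer.json").write_text("{}")

    # The manifest is stored next to the model directory, not inside it.
    manifest_path = root / "model.manifest.json"
    manifest_path.write_text(json.dumps({
        "files": {p.name: sha256_file(p) for p in model_dir.iterdir()}
    }))

    clean_report = check_against_manifest(model_dir, manifest_path)

    (model_dir / "weights.bin").write_bytes(b"weights-v2")  # tamper with a file
    (model_dir / "extra.py").write_text("print('hi')")       # inject a new file
    tampered_report = check_against_manifest(model_dir, manifest_path)
```

Keeping the record outside the artifacts means the model files can be distributed byte-for-byte unchanged, and the check can also flag files that were added after signing.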

OMS is PKI-agnostic, supporting a wide range of signing options, including:

- Private or enterprise PKI systems

- Self-signed certificates

- Bare keys

- Keyless signing with public or private Sigstore instances

This flexibility enables organizations to adopt OMS without changing their existing key management or trust models.

Figure 2: OMS Signature Format

Signing and Verifying with OMS

As reference implementations to speed adoption, OMS offers both a command-line interface (CLI) for lightweight operational use and a Python library for deep integration into CI/CD pipelines, automated publishing flows, and model hubs. Other library integrations are planned.

Signing and Verifying with Sigstore

Signing and Verifying with PKI Certificates

Other examples, including signing using PKCS#11, can be found in the model-signing documentation.

This design enables better interoperability across tools and vendors, reduces manual steps in model validation, and helps establish a consistent trust foundation across the AI lifecycle.

Looking Ahead

The release of OMS marks a major step forward in securing the AI supply chain. By enabling organizations to verify the integrity, provenance, and trustworthiness of machine learning artifacts, OMS lays the foundation for safer, more transparent AI development and deployment.

Backed by broad industry collaboration and designed with real-world workflows in mind, OMS is ready for adoption today. Whether integrating model signing into CI/CD pipelines, enforcing provenance policies, or distributing models at scale, OMS provides the tools and flexibility to meet enterprise needs.

This is just the first step towards a future of secure AI supply chains. The OpenSSF AI/ML Working Group is engaging with the Coalition for Secure AI to incorporate other AI metadata into the OMS Signature Format, such as training data sources, model version, hardware used, and compliance attributes.

To get started, explore the OMS specification, try the CLI and library, and join the OpenSSF AI/ML Working Group to help shape the future of trusted AI.

Special thanks to the contributors driving this effort forward, including Laurent Simon, Rich Harang, and the many others at Google, HiddenLayer, NVIDIA, Red Hat, Intel, Meta, IBM, Microsoft, and in the Sigstore, Coalition for Secure AI, and OpenSSF communities.

Mihai Maruseac is a member of the Google Open Source Security Team (GOSST), working on Supply Chain Security for ML. He is a co-lead on a Secure AI Framework (SAIF) workstream from Google. Under OpenSSF, Mihai chairs the AI/ML working group and the model signing project. Mihai is also a GUAC maintainer. Before joining GOSST, Mihai created the TensorFlow Security team and prior to Google, he worked on adding Differential Privacy to Machine Learning algorithms. Mihai has a PhD in Differential Privacy from UMass Boston.


Eoin Wickens, Director of Threat Intelligence at HiddenLayer, specializes in AI security, threat research, and malware reverse engineering. He has authored numerous articles on AI security, co-authored a book on cyber threat intelligence, and spoken at conferences such as SANS AI Cybersecurity Summit, BSides SF, LABSCON, and 44CON, and delivered the 2024 ACM SCORED opening keynote.


Daniel Major is a Distinguished Security Architect at NVIDIA, where he provides security leadership in areas such as code signing, device PKI, ML deployments and mobile operating systems. Previously, as Principal Security Architect at BlackBerry, he played a key role in leading the mobile phone division’s transition from BlackBerry 10 OS to Android. When not working, Daniel can be found planning his next travel adventure.


Martin Sablotny is a security architect for AI/ML at NVIDIA working on identifying existing gaps in AI security and researching solutions. He received his Ph.D. in computing science from the University of Glasgow in 2023. Before joining NVIDIA, he worked as a security researcher in the German military and conducted research in using AI for security at Google.
