By Mihai Maruseac (Google), Eoin Wickens (HiddenLayer), Daniel Major (NVIDIA), Martin Sablotny (NVIDIA)

As AI adoption continues to accelerate, so does the need to secure the AI supply chain. Organizations want to be able to verify that the models they build, deploy, or consume are authentic, untampered, and compliant with internal policies and external regulations. From tampered models to poisoned datasets, the risks facing production AI systems are growing — and the industry is responding.

In collaboration with industry partners, the AI/ML Working Group of the Open Source Security Foundation (OpenSSF) recently delivered a model signing solution. Today, we are formalizing the signature format as OpenSSF Model Signing (OMS): a flexible and implementation-agnostic standard for model signing, purpose-built for the unique requirements of AI workflows.

What is Model Signing

Model signing is a cryptographic process that creates a verifiable record of the origin and integrity of machine learning models. Recipients can verify that a model was published by the expected source, and has not subsequently been tampered with.

Signing AI artifacts is an essential step in building trust and accountability across the AI supply chain. For projects that depend on open source foundational models, project teams can verify the models they are building upon are the ones they trust. Organizations can trace the integrity of models — whether models are developed in-house, shared between teams, or deployed into production.

Key stakeholders that benefit from model signing:

- End users gain confidence that the models they are running are legitimate and unmodified.

- Compliance and governance teams benefit from traceable metadata that supports audits and regulatory reporting.

- Developers and MLOps teams are equipped to trace issues, improve incident response, and ensure reproducibility across experiments and deployments.

How Does Model Signing Work

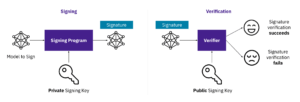

Model signing uses cryptographic keys to ensure the integrity and authenticity of an AI model. A signing program uses a private key to generate a digital signature for the model. This signature can then be verified by anyone using the corresponding public key. These keys can be generated a priori, obtained from signing certificates, or generated transparently during the Sigstore signing flow. If verification succeeds, the model is confirmed as untampered and authentic; if it fails, the model may have been altered or is untrusted.

Figure 1: Model Signing Diagram
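
The sign-then-verify flow described above can be illustrated with a minimal Python sketch. It is not the OMS implementation: it assumes the third-party `cryptography` package is installed, uses an Ed25519 key generated on the spot, and the helper names `sign_model` and `verify_model` are hypothetical.

```python
import hashlib

from cryptography.exceptions import InvalidSignature
from cryptography.hazmat.primitives.asymmetric.ed25519 import (
    Ed25519PrivateKey,
    Ed25519PublicKey,
)


def sign_model(model_bytes: bytes, private_key: Ed25519PrivateKey) -> bytes:
    """Sign the SHA-256 digest of the model with the publisher's private key."""
    digest = hashlib.sha256(model_bytes).digest()
    return private_key.sign(digest)


def verify_model(
    model_bytes: bytes, signature: bytes, public_key: Ed25519PublicKey
) -> bool:
    """Return True only if the signature matches this exact model content."""
    digest = hashlib.sha256(model_bytes).digest()
    try:
        public_key.verify(signature, digest)  # raises on any mismatch
    except InvalidSignature:
        return False
    return True


if __name__ == "__main__":
    # Hypothetical stand-in for real model weights.
    model = b"pretend these bytes are model weights"
    key = Ed25519PrivateKey.generate()
    signature = sign_model(model, key)

    print(verify_model(model, signature, key.public_key()))         # True
    print(verify_model(model + b"!", signature, key.public_key()))  # False
```

Changing a single byte of the model, or checking against a different public key, makes verification fail, which is the property the diagram depicts.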

How Does OMS Work

OMS Signature Format

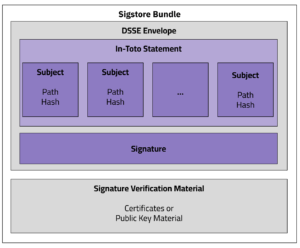

OMS is designed to handle the complexity of modern AI systems, supporting any type of model format and models of any size. Instead of treating each file independently, OMS uses a detached OMS Signature Format that can represent multiple related artifacts—such as model weights, configuration files, tokenizers, and datasets—in a single, verifiable unit.

The OMS Signature Format includes:

- A list of all files in the bundle, each referenced by its cryptographic hash (e.g., SHA256)

- An optional annotations section for custom, domain-specific fields (future support coming)

- A digital signature that covers the entire manifest, ensuring tamper-evidence

The OMS Signature File follows the Sigstore Bundle Format, ensuring maximum compatibility with existing tooling in the ecosystem of Sigstore, a graduated OpenSSF project. This detached format allows verification without modifying or repackaging the original content, making it easier to integrate into existing workflows and distribution systems.
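
To make the idea of a detached, hash-based manifest concrete, here is a rough Python sketch that uses only the standard library. The JSON layout and the field names `files`, `name`, and `sha256` are assumptions made for illustration; they are not the OMS or Sigstore Bundle schema, and the signing step itself is left out.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def hash_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large weight files never sit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(model_dir: Path) -> dict:
    """List every file under model_dir by relative name and SHA-256 hash."""
    entries = {
        p.relative_to(model_dir).as_posix(): hash_file(p)
        for p in model_dir.rglob("*")
        if p.is_file()
    }
    # Sort by name so the same content always yields the same manifest.
    return {"files": [{"name": n, "sha256": entries[n]} for n in sorted(entries)]}


def manifest_digest(manifest: dict) -> str:
    """Digest of a canonical JSON encoding: the value a signer would sign."""
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify_manifest(model_dir: Path, manifest: dict) -> bool:
    """Recompute the hashes; any added, removed, or changed file fails the check."""
    return build_manifest(model_dir) == manifest


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        model_dir = Path(tmp)
        (model_dir / "weights.bin").write_bytes(b"\x00\x01\x02")
        (model_dir / "config.json").write_text("{}")

        manifest = build_manifest(model_dir)  # kept outside the model directory
        print(verify_manifest(model_dir, manifest))  # True

        (model_dir / "config.json").write_text('{"changed": true}')
        print(verify_manifest(model_dir, manifest))  # False
```

Because the manifest is stored apart from the model files, the check runs against the model directory exactly as distributed, and one signature over the manifest digest covers every file it lists.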

OMS is PKI-agnostic, supporting a wide range of signing options, including:

- Private or enterprise PKI systems

- Self-signed certificates

- Bare keys

- Keyless signing with public or private Sigstore instances

This flexibility enables organizations to adopt OMS without changing their existing key management or trust models.

Figure 2: OMS Signature Format

Signing and Verifying with OMS

As reference implementations to speed adoption, OMS offers both a command-line interface (CLI) for lightweight operational use and a Python library for deep integration into CI/CD pipelines, automated publishing flows, and model hubs. Other library integrations are planned.

Signing and Verifying with Sigstore

Signing and Verifying with PKI Certificates

Other examples, including signing using PKCS#11, can be found in the model-signing documentation.

This design enables better interoperability across tools and vendors, reduces manual steps in model validation, and helps establish a consistent trust foundation across the AI lifecycle.

Looking Ahead

The release of OMS marks a major step forward in securing the AI supply chain. By enabling organizations to verify the integrity, provenance, and trustworthiness of machine learning artifacts, OMS lays the foundation for safer, more transparent AI development and deployment.

Backed by broad industry collaboration and designed with real-world workflows in mind, OMS is ready for adoption today. Whether integrating model signing into CI/CD pipelines, enforcing provenance policies, or distributing models at scale, OMS provides the tools and flexibility to meet enterprise needs.

This is just the first step towards a future of secure AI supply chains. The OpenSSF AI/ML Working Group is engaging with the Coalition for Secure AI to incorporate richer AI metadata into the OMS Signature Format, such as training data sources, model version, hardware used, and compliance attributes.

To get started, explore the OMS specification, try the CLI and library, and join the OpenSSF AI/ML Working Group to help shape the future of trusted AI.

Special thanks to the contributors driving this effort forward, including Laurent Simon, Rich Harang, and the many others at Google, HiddenLayer, NVIDIA, Red Hat, Intel, Meta, IBM, Microsoft, and in the Sigstore, Coalition for Secure AI, and OpenSSF communities.

Mihai Maruseac is a member of the Google Open Source Security Team (GOSST), working on Supply Chain Security for ML. He is a co-lead on a Secure AI Framework (SAIF) workstream from Google. Under OpenSSF, Mihai chairs the AI/ML working group and the model signing project. Mihai is also a GUAC maintainer. Before joining GOSST, Mihai created the TensorFlow Security team and prior to Google, he worked on adding Differential Privacy to Machine Learning algorithms. Mihai has a PhD in Differential Privacy from UMass Boston.

Eoin Wickens, Director of Threat Intelligence at HiddenLayer, specializes in AI security, threat research, and malware reverse engineering. He has authored numerous articles on AI security, co-authored a book on cyber threat intelligence, and spoken at conferences such as SANS AI Cybersecurity Summit, BSides SF, LABSCON, and 44CON, and delivered the 2024 ACM SCORED opening keynote.

Daniel Major is a Distinguished Security Architect at NVIDIA, where he provides security leadership in areas such as code signing, device PKI, ML deployments and mobile operating systems. Previously, as Principal Security Architect at BlackBerry, he played a key role in leading the mobile phone division’s transition from BlackBerry 10 OS to Android. When not working, Daniel can be found planning his next travel adventure.

Martin Sablotny is a security architect for AI/ML at NVIDIA working on identifying existing gaps in AI security and researching solutions. He received his Ph.D. in computing science from the University of Glasgow in 2023. Before joining NVIDIA, he worked as a security researcher in the German military and conducted research in using AI for security at Google.
