By Ben Cotton and Dejan Bosanac

The superpower of open source is multiple people working together on a common goal. That works for projects, too. GUAC and Trustify are two projects bringing visibility to the software supply chain. Today, they’re combining under the GUAC umbrella. With Red Hat’s contribution of Trustify to the GUAC project, the two form a unified effort to address the challenges of consuming, processing, and utilizing supply chain security metadata at scale.

The Graph for Understanding Artifact Composition (GUAC) project was created to bring understanding to software supply chains. GUAC ingests software bills of materials (SBOMs) and enriches them with additional data to create a queryable graph of the software supply chain. Trustify also ingests and manages SBOMs, with a focus on security and compliance. With so much overlap, it makes sense to combine our efforts.
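
To make the idea of a "queryable graph" concrete, here is a minimal sketch in Python. It is not GUAC's or Trustify's data model or API. The simplified SBOM records, the package names, and the helpers `build_graph` and `affected_by` are all hypothetical, chosen only to show how ingested dependency data can answer a question like "which artifacts are affected if this package has a problem?"

```python
from collections import defaultdict

# Hypothetical, heavily simplified SBOM records: each names one artifact
# and the packages it depends on directly. Real SBOMs (SPDX, CycloneDX)
# carry far more detail.
SBOMS = [
    {"artifact": "web-app", "dependencies": ["http-lib", "json-lib"]},
    {"artifact": "http-lib", "dependencies": ["tls-lib"]},
    {"artifact": "cli-tool", "dependencies": ["json-lib"]},
]


def build_graph(sboms):
    """Return reverse edges: package -> set of artifacts that depend on it."""
    dependents = defaultdict(set)
    for sbom in sboms:
        for dep in sbom["dependencies"]:
            dependents[dep].add(sbom["artifact"])
    return dependents


def affected_by(dependents, package):
    """Collect every artifact that depends on `package`, directly or transitively."""
    seen = set()
    stack = [package]
    while stack:
        current = stack.pop()
        for parent in dependents.get(current, ()):
            if parent not in seen:  # the seen set also guards against cycles
                seen.add(parent)
                stack.append(parent)
    return seen


graph = build_graph(SBOMS)
print(sorted(affected_by(graph, "tls-lib")))  # ['http-lib', 'web-app']
```

Storing the edges in the reverse direction keeps the "who is affected?" query a simple traversal. A real knowledge graph would also attach enrichment data, such as vulnerability or provenance records, to the nodes.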

The grand vision for this evolved community is to become the central hub within OpenSSF for initiatives focused on building and using supply chain knowledge graphs. This includes defining and promoting common standards, data models, and ontologies; developing shared infrastructure and libraries; improving the overall tooling ecosystem; fostering collaboration and knowledge sharing; and providing a clear and welcoming community for contributors.

Right now, we’re working on the basic logistics: migrating repositories, updating websites, merging documentation. We have created a new GUAC Steering Committee that oversees two core projects, GUAC and Trustify, along with subprojects like sw-id-core and GUAC Visualizer. These projects have their own maintainers, but we expect to see a lot of cross-collaboration as everyone gets settled in.

If you’d like to learn more, join Ben Cotton and Dejan Bosanac at OpenSSF Community Day Europe for their talk on Thursday 28 August. If you can’t make it to Amsterdam, the community page has all of the ways you can engage with our community.

Ben Cotton is the open source community lead at Kusari, where he contributes to GUAC and leads the OSPS Baseline SIG. He has over a decade of leadership experience in Fedora and other open source communities. His career has taken him through the public and private sector in roles that include desktop support, high-performance computing administration, marketing, and program management. Ben is the author of Program Management for Open Source Projects and has contributed to the book Human at a Distance and to articles in The Next Platform, Opensource.com, Scientific Computing, and more.

Dejan Bosanac is a software engineer at Red Hat with an interest in open source and integrating systems. Over the years he’s been involved in various open source communities tackling problems such as software supply chain security, IoT cloud platforms and edge computing, and enterprise messaging.

By Sarah Evans and Andrey Shorov

The world of technology is constantly evolving, and with the rise of Artificial Intelligence (AI) and Machine Learning (ML), the demand for robust security measures has become more critical than ever. As organizations rush to deploy AI solutions, the gap between ML innovation and security practices has created unprecedented vulnerabilities we are only beginning to understand.

A new whitepaper, “Visualizing Secure MLOps (MLSecOps): A Practical Guide for Building Robust AI/ML Pipeline Security,” addresses this critical gap by providing a comprehensive framework for practitioners focused on building and securing machine learning pipelines.

Why this topic? Why now?

AI/ML systems encompass unique components, such as training datasets, models, and inference pipelines, that introduce novel weaknesses demanding dedicated attention throughout the ML lifecycle.

The evolving responsibilities within organizations have led to an intersection of expertise:

These trends have exposed a gap in security knowledge, leaving AI/ML pipelines susceptible to risks that neither discipline alone is fully equipped to manage. To resolve this, we investigated how to adapt the principles of secure DevOps to MLOps by creating an MLSecOps framework that gives both software developers and AI-focused professionals the tools and processes needed for end-to-end ML pipeline security. During our research, we found little practical guidance on securing ML pipelines with the open-source tools developers commonly use. This whitepaper aims to bridge that gap and provide a practical starting point.

This whitepaper is the result of a collaboration between Dell and Ericsson, made possible by our shared membership in the OpenSSF. Its foundation is a publication on MLSecOps for telecom environments authored by Ericsson researchers [https://www.ericsson.com/en/reports-and-papers/white-papers/mlsecops-protecting-the-ai-ml-lifecycle-in-telecom]. Together, we have expanded upon Ericsson’s original MLSecOps framework to create a comprehensive guide that addresses the needs of diverse industry sectors.

We are proud to share this guide as an industry resource that demonstrates how to apply open-source tools from secure DevOps to secure MLOps. It offers a progressive, visual learning experience in which concepts are layered upon one another, extending security beyond traditional code-centric approaches. This guide integrates insights from CI/CD, the ML lifecycle, various personas, a sample reference architecture, mapped risks, security controls, and practical tools.

The document introduces a visual, “layer-by-layer” approach to help practitioners securely adopt ML, leveraging open-source tools from OpenSSF initiatives such as Supply-Chain Levels for Software Artifacts (SLSA), Sigstore, and OpenSSF Scorecard. It further explores opportunities to extend these tools to secure the AI/ML lifecycle using MLSecOps practices, while identifying specific gaps in current tooling and offering recommendations for future development.

For practitioners involved in designing, developing, deploying, operating, and securing AI/ML systems, this whitepaper provides a practical foundation for building robust and secure AI/ML pipelines and applications.

Ready to help shape the future of secure AI and ML?

Join the AI/ML Security Working Group

Sarah Evans delivers technical innovation for secure business outcomes through her role as the security research program lead in the Office of the CTO at Dell Technologies. She is an industry leader and advocate for extending secure operations and supply chain development principles in AI. Sarah also ensures the security research program explores the overlapping security impacts of emerging technologies in other research programs, such as quantum computing. Sarah leverages her extensive practical experience in security and IT, spanning small businesses, large enterprises (including the highly regulated financial services industry and a 21-year military career), and academia (computer information systems). She earned an MBA, an AIML professional certificate from MIT, and is a certified information security manager. Sarah is also a strategic and technical leader representing Dell in OpenSSF, a foundation for securing open source software.

Andrey Shorov is a Senior Security Technology Specialist at Product Security, Ericsson. He is a cybersecurity expert with more than 16 years of experience across corporate and academic environments. Specializing in AI/ML and network security, Andrey advances AI-driven cybersecurity strategies, leading the development of cutting-edge security architectures and practices at Ericsson and contributing research that shapes industry standards. He holds a Ph.D. in Computer Science and maintains CISSP and Security+ certifications.

By Mihai Maruseac (Google), Eoin Wickens (HiddenLayer), Daniel Major (NVIDIA), Martin Sablotny (NVIDIA)

As AI adoption continues to accelerate, so does the need to secure the AI supply chain. Organizations want to be able to verify that the models they build, deploy, or consume are authentic, untampered, and compliant with internal policies and external regulations. From tampered models to poisoned datasets, the risks facing production AI systems are growing — and the industry is responding.

In collaboration with industry partners, the Open Source Security Foundation (OpenSSF)’s AI/ML Working Group recently delivered a model signing solution. Today, we are formalizing the signature format as OpenSSF Model Signing (OMS): a flexible and implementation-agnostic standard for model signing, purpose-built for the unique requirements of AI workflows.

Model signing is a cryptographic process that creates a verifiable record of the origin and integrity of machine learning models. Recipients can verify that a model was published by the expected source, and has not subsequently been tampered with.

Signing AI artifacts is an essential step in building trust and accountability across the AI supply chain. For projects that depend on open source foundational models, project teams can verify the models they are building upon are the ones they trust. Organizations can trace the integrity of models — whether models are developed in-house, shared between teams, or deployed into production.

Key stakeholders that benefit from model signing:

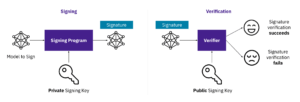

Model signing uses cryptographic keys to ensure the integrity and authenticity of an AI model. A signing program uses a private key to generate a digital signature for the model. This signature can then be verified by anyone using the corresponding public key. These keys can be generated a priori, obtained from signing certificates, or generated transparently during the Sigstore signing flow. If verification succeeds, the model is confirmed as untampered and authentic; if it fails, the model may have been altered or is untrusted.

Figure 1: Model Signing Diagram

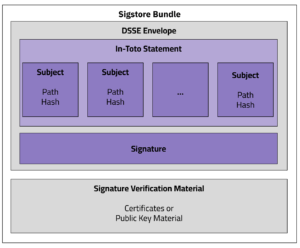

OMS is designed to handle the complexity of modern AI systems, supporting any type of model format and models of any size. Instead of treating each file independently, OMS uses a detached OMS Signature Format that can represent multiple related artifacts—such as model weights, configuration files, tokenizers, and datasets—in a single, verifiable unit.
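
As a rough illustration of the "single, verifiable unit" idea, the sketch below hashes every file in a model directory into one manifest and derives a single digest from it. This is not the OMS Signature Format or the model-signing library's API. The manifest layout and the helpers `file_digest`, `build_manifest`, `manifest_digest`, and `verify` are hypothetical, and the cryptographic signature itself is left out: in a real flow, a signer would sign the manifest and ship the result as a detached file next to the model.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_digest(path: Path) -> str:
    """SHA-256 of one file, read in chunks so large weight files fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(model_dir: Path) -> dict:
    """Map each file's relative path to its digest (hypothetical layout)."""
    files = {
        p.relative_to(model_dir).as_posix(): file_digest(p)
        for p in sorted(model_dir.rglob("*"))
        if p.is_file()
    }
    return {"algorithm": "sha256", "files": files}


def manifest_digest(manifest: dict) -> str:
    """Digest over a canonical JSON encoding; a signer would sign this value."""
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify(model_dir: Path, expected: str) -> bool:
    """Re-hash the directory and compare against the recorded digest."""
    return manifest_digest(build_manifest(model_dir)) == expected


def demo() -> tuple:
    with tempfile.TemporaryDirectory() as tmp:
        model_dir = Path(tmp)
        (model_dir / "weights.bin").write_bytes(b"\x00\x01\x02")
        (model_dir / "config.json").write_text('{"layers": 2}')
        # The recorded digest lives outside the model files (detached).
        recorded = manifest_digest(build_manifest(model_dir))
        ok_before = verify(model_dir, recorded)
        # Simulate tampering with the weights.
        (model_dir / "weights.bin").write_bytes(b"\x00\x01\x03")
        ok_after = verify(model_dir, recorded)
        return ok_before, ok_after


if __name__ == "__main__":
    print(demo())  # (True, False)
```

Because the digest covers the whole manifest, changing, adding, or removing any file invalidates verification, and the model files themselves never need to be modified or repackaged.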

The OMS Signature Format includes:

The OMS Signature File follows the Sigstore Bundle Format, ensuring maximum compatibility with existing Sigstore (a graduated OpenSSF project) ecosystem tooling. This detached format allows verification without modifying or repackaging the original content, making it easier to integrate into existing workflows and distribution systems.

OMS is PKI-agnostic, supporting a wide range of signing options, including:

This flexibility enables organizations to adopt OMS without changing their existing key management or trust models.

Figure 2: OMS Signature Format

As reference implementations to speed adoption, OMS offers both a command-line interface (CLI) for lightweight operational use and a Python library for deep integration into CI/CD pipelines, automated publishing flows, and model hubs. Other library integrations are planned.

Other examples, including signing using PKCS#11, can be found in the model-signing documentation.

This design enables better interoperability across tools and vendors, reduces manual steps in model validation, and helps establish a consistent trust foundation across the AI lifecycle.

The release of OMS marks a major step forward in securing the AI supply chain. By enabling organizations to verify the integrity, provenance, and trustworthiness of machine learning artifacts, OMS lays the foundation for safer, more transparent AI development and deployment.

Backed by broad industry collaboration and designed with real-world workflows in mind, OMS is ready for adoption today. Whether integrating model signing into CI/CD pipelines, enforcing provenance policies, or distributing models at scale, OMS provides the tools and flexibility to meet enterprise needs.

This is just the first step towards a future of secure AI supply chains. The OpenSSF AI/ML Working Group is engaging with the Coalition for Secure AI to incorporate richer AI metadata into the OMS Signature Format, such as training data sources, model version, hardware used, and compliance attributes.

To get started, explore the OMS specification, try the CLI and library, and join the OpenSSF AI/ML Working Group to help shape the future of trusted AI.

Special thanks to the contributors driving this effort forward, including Laurent Simon, Rich Harang, and the many others at Google, HiddenLayer, NVIDIA, Red Hat, Intel, Meta, IBM, Microsoft, and in the Sigstore, Coalition for Secure AI, and OpenSSF communities.

Mihai Maruseac is a member of the Google Open Source Security Team (GOSST), working on Supply Chain Security for ML. He is a co-lead on a Secure AI Framework (SAIF) workstream from Google. Under OpenSSF, Mihai chairs the AI/ML working group and the model signing project. Mihai is also a GUAC maintainer. Before joining GOSST, Mihai created the TensorFlow Security team and prior to Google, he worked on adding Differential Privacy to Machine Learning algorithms. Mihai has a PhD in Differential Privacy from UMass Boston.

Eoin Wickens, Director of Threat Intelligence at HiddenLayer, specializes in AI security, threat research, and malware reverse engineering. He has authored numerous articles on AI security, co-authored a book on cyber threat intelligence, and spoken at conferences such as SANS AI Cybersecurity Summit, BSides SF, LABSCON, and 44CON, and delivered the 2024 ACM SCORED opening keynote.

Daniel Major is a Distinguished Security Architect at NVIDIA, where he provides security leadership in areas such as code signing, device PKI, ML deployments and mobile operating systems. Previously, as Principal Security Architect at BlackBerry, he played a key role in leading the mobile phone division’s transition from BlackBerry 10 OS to Android. When not working, Daniel can be found planning his next travel adventure.

Martin Sablotny is a security architect for AI/ML at NVIDIA working on identifying existing gaps in AI security and researching solutions. He received his Ph.D. in computing science from the University of Glasgow in 2023. Before joining NVIDIA, he worked as a security researcher in the German military and conducted research in using AI for security at Google.