By: Trevor Rosen

This post originally appeared on GitHub.blog and has been revised for OpenSSF.

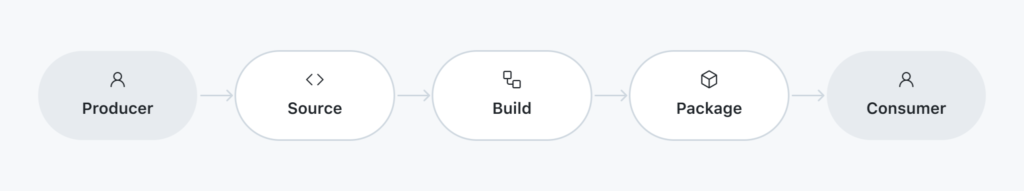

Software is a funny, profound thing: Each piece of it is an invisible machine, seemingly made of magic words, designed to run on the ultimate, universal machine. It’s not alive, but it has a lifecycle. It starts out as source code (just text files sitting in a repository somewhere), and then later, through some unique process, that source gets built into something else: a chunk of minified JavaScript delivered to a web server, a container image full of framework code and business logic, a raw binary compiled for a specific processor architecture. That final stage of metamorphosis, the something else that source code becomes, is what we usually refer to as a “software artifact.” After their creation, artifacts tend to spend a good chunk of time at rest, waiting to be used. They wait in package registries (such as npm, RubyGems, PyPI, and Maven Central) or in container registries (such as GitHub Packages, Azure Container Registry, and AWS ECR), as binaries attached to GitHub Releases, or just in a ZIP file sitting in blob storage somewhere.

Eventually, someone decides to pick up that artifact and use it. They unzip the package, execute the code, launch the container, install the driver, or update the firmware; no matter the modality, suddenly the built thing is running. This is the culmination of a production lifecycle that can take many human-hours, cost lots of money, and (given that the modern world runs on software) be as high stakes as it gets.

And yet, in so many cases, we don’t have a strong guarantee that the artifact we run is definitely the thing that we built. The details of that artifact’s journey are lost or, at best, hazy; it’s hard to connect the artifact back to the source code and build instructions it came from. This lack of visibility into the artifact’s lifecycle is the source of many of today’s most compelling security challenges. Throughout the software development lifecycle (SDLC), there are opportunities to secure the flow of code as it is transformed into artifacts, and doing so helps reduce the risk that threat actors will poison finished software and create havoc.

Some challenges in cybersecurity can feel almost impossible to successfully address, but this isn’t one of them. Let’s dig in with some background.

Digests and signatures

Say you have a file in your home directory, and you want to make sure that it’s exactly the same tomorrow as it is today. What do you do? A good way to start is to generate a digest of the file by running it through a secure hashing algorithm. With OpenSSL, for example, you can do that using the SHA-256 algorithm by running `openssl dgst -sha256` followed by the file’s path.

Now, you’ve got a digest (also called a hash): a 64-character hexadecimal string that acts as a practically unique fingerprint for that file. Change literally anything in that file, run the hash function again, and you’ll get a different string. You can write down the digest somewhere, come back tomorrow, and repeat the process. If you don’t get the same digest both times, something in the file has changed.
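
The same check can also be scripted. Here’s a minimal sketch using Python’s standard-library `hashlib` module; the function name `sha256_file` and the chunk size are our own illustrative choices rather than part of any tool mentioned here.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 65536) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        # Stream the file so large artifacts don't need to fit in memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()  # 64 hexadecimal characters


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        p = Path(d) / "important.txt"
        p.write_text("hello\n")
        today = sha256_file(p)
        p.write_text("hello!\n")  # change a single character
        tomorrow = sha256_file(p)
        print(len(today), today == tomorrow)  # 64 False
```

Storing the digest somewhere you trust and comparing it later is exactly the “write it down and come back tomorrow” check described above.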

OK, so far, so good: we can determine whether something has been tampered with. But what if we want to make a statement about the artifact? What if we want to say, “I saw this artifact today, and I (a system or a person) guarantee that this particular thing is definitely the thing I saw.” At that point, what you want is a software artifact signature: you take your digest and run it through a cryptographic algorithm that produces another string representing the act of “signing” that fingerprint with a unique key. If you want someone else to be able to confirm your signature, you’ll want to use asymmetric (public-key) cryptography: Sign the digest with your private key and give out the corresponding public key so that anyone out there in the world who gets your file can verify it.
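
To make the sign/verify split concrete, here is a deliberately toy sketch in Python: textbook RSA over a SHA-256 digest, using only the standard library. The key below is built from two well-known Mersenne primes, so it is public knowledge and offers no security at all, and there is no padding scheme. It only illustrates the shape of the idea: the private exponent produces the signature, and the public pair (`N`, `E`) checks it. Real signing should use a vetted cryptography library and a modern scheme.

```python
import hashlib

# TOY KEY, FOR ILLUSTRATION ONLY. P and Q are well-known Mersenne primes,
# so anyone can recompute the private exponent. Real signers use vetted
# libraries, secret random keys, and padded or elliptic-curve schemes.
P = 2**127 - 1
Q = 2**521 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)


def digest_as_int(data: bytes) -> int:
    """SHA-256 fingerprint of the data as an integer (always smaller than N)."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def sign(data: bytes) -> int:
    """'Sign' the digest with the private exponent D (textbook RSA, no padding)."""
    return pow(digest_as_int(data), D, N)


def verify(data: bytes, signature: int) -> bool:
    """Anyone holding the public key (N, E) can check the signature."""
    return pow(signature, E, N) == digest_as_int(data)


if __name__ == "__main__":
    artifact = b"contents of my-tool-1.0.tar.gz"
    sig = sign(artifact)
    print(verify(artifact, sig))         # True
    print(verify(artifact + b"!", sig))  # False
```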

You probably already know that asymmetric cryptography is the basis for almost all trust on the Internet. It’s how you can securely interact with your bank and how GitHub can securely deliver your repository contents. We use it to create trusted communication channels, such as TLS and SSH, but we also use it to create a basis for trusting software via signatures.

Operating systems, such as Windows, macOS, iOS, and Android, all have mechanisms for ensuring a trusted origin for executable software artifacts by enforcing the presence of a signature. These systems are incredibly important components of the modern software world, and building them is fiendishly difficult.

Don’t just sign—attest

When thinking about how to expose more trustable information about a software artifact, a signature is a good start. It says, “some trusted system definitely saw this thing.” But if you want to truly offer an evolutionary leap in the security of the SDLC as a whole, you need to go beyond mere signatures and think in terms of attestations.

An attestation is an assertion of fact, a statement made about an artifact or artifacts and created by some entity that can be authenticated. It can be authenticated because the statement is signed and the key that did the signing can be trusted.
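
In the in-toto ecosystem, a common way to carry a signed statement is a DSSE (Dead Simple Signing Envelope) envelope. DSSE isn’t named in the text above; we bring it in as one concrete example of what “the statement is signed” looks like. The sketch below builds DSSE’s pre-authentication encoding (PAE), the exact byte string that gets signed, and wraps the result in an envelope. The `sign` callable and the key ID are hypothetical placeholders; plug in any asymmetric signer.

```python
import base64
import json
from typing import Callable

PAYLOAD_TYPE = "application/vnd.in-toto+json"


def pae(payload_type: str, payload: bytes) -> bytes:
    """DSSE pre-authentication encoding: the bytes that actually get signed."""
    t = payload_type.encode("utf-8")
    # "DSSEv1" SP LEN(type) SP type SP LEN(body) SP body, lengths in decimal bytes
    return b"DSSEv1 %d %s %d %s" % (len(t), t, len(payload), payload)


def make_envelope(statement: dict, keyid: str, sign: Callable[[bytes], bytes]) -> dict:
    """Wrap a statement in a DSSE envelope signed by the caller-supplied `sign`."""
    payload = json.dumps(statement, separators=(",", ":")).encode("utf-8")
    signature = sign(pae(PAYLOAD_TYPE, payload))
    return {
        "payloadType": PAYLOAD_TYPE,
        "payload": base64.b64encode(payload).decode("ascii"),
        "signatures": [
            {"keyid": keyid, "sig": base64.b64encode(signature).decode("ascii")},
        ],
    }
```

A verifier does the reverse: it base64-decodes `payload`, recomputes the PAE with the envelope’s `payloadType`, and checks each signature against a public key it already trusts. Because the payload type is part of the signed bytes, a signature over one kind of document can’t be replayed as a signature over another.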

The most important and foundational kind of attestation is one that asserts facts about the origin and creation of the artifact—the source code it came from and the build instructions that transmuted that source into an artifact. We call this a provenance attestation.

The provenance attestation spec that we’ve chosen comes from the Supply-chain Levels for Software Artifacts (SLSA) project. SLSA is an Open Source Security Foundation (OpenSSF) project, and it is a great way to think about software supply chain security because it gives producers and consumers of software a common framework for reasoning about security guarantees and boundaries in a way that is agnostic of specific systems and tech stacks. SLSA offers a standardized schema for producing provenance attestations for software artifacts, based on the work done by the in-toto project. in-toto is a graduated Cloud Native Computing Foundation (CNCF) project that exists to (among other things) provide a collection of standardized metadata schemas for relevant information about your supply chain and build process.
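
To give a feel for what a provenance attestation contains, here is a sketch that assembles an in-toto v1 Statement whose predicate follows the SLSA v1 provenance layout. The top-level field names (`_type`, `subject`, `predicateType`, `predicate`) and the `buildDefinition`/`runDetails` structure come from those specs, but we include only a small subset of fields; the build type URI, builder ID, and source values are hypothetical placeholders.

```python
import hashlib
import json


def provenance_statement(artifact_name: str, artifact_bytes: bytes,
                         builder_id: str, source_uri: str, source_commit: str) -> dict:
    """Build a minimal in-toto v1 Statement carrying a SLSA v1 provenance predicate."""
    return {
        "_type": "https://in-toto.io/Statement/v1",
        # The subject ties the statement to the artifact by digest, not by name alone.
        "subject": [{
            "name": artifact_name,
            "digest": {"sha256": hashlib.sha256(artifact_bytes).hexdigest()},
        }],
        "predicateType": "https://slsa.dev/provenance/v1",
        "predicate": {
            "buildDefinition": {
                # Hypothetical build type URI; real builders publish their own.
                "buildType": "https://example.com/hypothetical-build-type/v1",
                "externalParameters": {"source": source_uri},
                "resolvedDependencies": [
                    {"uri": source_uri, "digest": {"gitCommit": source_commit}},
                ],
            },
            "runDetails": {"builder": {"id": builder_id}},
        },
    }


if __name__ == "__main__":
    stmt = provenance_statement(
        "my-tool-1.0.tar.gz", b"...artifact bytes...",
        builder_id="https://example.com/hypothetical-builder",
        source_uri="git+https://example.com/hypothetical/repo",
        source_commit="0" * 40,
    )
    print(json.dumps(stmt, indent=2))
```

In practice, this statement is what gets signed; the `subject` digest is what ties it back to one exact artifact.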

What does it take to build something like this?

As the world’s largest software development platform, hosting a great deal of code and build pipelines, we’ve been thinking about this a lot. Building an attestation service involves a number of moving parts.

Doing so would mean having a way to:

- Issue certificates (essentially public keys bound to some authenticated identity)

- Make sure that those certificates can’t be misused

- Enable the secure signing of artifacts in a well-known context

- Verify those signatures in a way that the end user can trust

This means setting up a certificate authority (CA) and having some kind of client app that can verify signatures made with certificates issued by that authority. To keep the certificates from being misused, you need to either 1) maintain certificate revocation lists or 2) ensure that the signing certificate is short lived, which means having a countersignature from some kind of timestamping authority (which can provide an authoritative stamp showing that the certificate was used to produce a signature only during the timeframe in which it was valid).
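
Why does a timestamp make short-lived certificates workable? A verifier checking a signature weeks later will find the certificate long expired, so it can’t compare the validity window against the current time. Instead, it compares the window against the trusted time at which the signature was made, as attested by the timestamping authority. Here is a minimal sketch, assuming the countersignature has already been verified and its time extracted; the 10-minute lifetime is a hypothetical value chosen for illustration.

```python
from datetime import datetime, timedelta, timezone


def signature_time_ok(not_before: datetime, not_after: datetime, timestamp: datetime) -> bool:
    """Accept a signature only if the countersigned time falls inside the cert's validity window."""
    return not_before <= timestamp <= not_after


if __name__ == "__main__":
    issued = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
    expires = issued + timedelta(minutes=10)  # hypothetical short lifetime
    print(signature_time_ok(issued, expires, issued + timedelta(minutes=3)))  # True
    print(signature_time_ok(issued, expires, issued + timedelta(days=30)))    # False
```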

This is where Sigstore comes in. Sigstore, an OpenSSF project, offers both an X.509 CA and a timestamp authority based on RFC 3161. It also lets you establish identity using OpenID Connect (OIDC) tokens, which many CI systems already produce and associate with their workloads.
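
An OIDC ID token is a JSON Web Token (JWT): three base64url-encoded segments (header, payload, signature) joined by dots. The identity a CA binds into a certificate comes from claims in the payload, such as `iss` (the issuer) and `sub` (the subject). The sketch below only decodes those claims for inspection; it deliberately does not verify the token’s signature, which a real CA must do against the issuer’s published keys before trusting any claim. The token and claim values in the demo are hypothetical.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode base64url, restoring the '=' padding that JWTs strip."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def unverified_claims(token: str) -> dict:
    """Return a JWT's payload claims WITHOUT checking its signature (inspection only)."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


if __name__ == "__main__":
    # Hypothetical, unsigned token built locally just to exercise the decoder.
    def enc(obj: dict) -> str:
        raw = json.dumps(obj).encode("utf-8")
        return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")

    claims = {"iss": "https://issuer.example", "sub": "repo:example/app:ref:refs/heads/main"}
    token = ".".join([enc({"alg": "none"}), enc(claims), ""])
    print(unverified_claims(token)["sub"])  # repo:example/app:ref:refs/heads/main
```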

Sigstore does for software signatures what Let’s Encrypt has done for TLS certificates: make them simple, transparent, and easy to adopt. At GitHub, we help oversee the governance of the OpenSSF Sigstore project via our seat on the Technical Steering Committee, we are maintainers of the server applications and multiple client libraries, and (along with folks from Chainguard, Google, Red Hat, and Stacklok) we form the operations team for the Sigstore Public Good Instance, which exists to support public attestations for open source software projects.

Sigstore requires a secure root of trust that complies with the standard laid out by The Update Framework (TUF). This allows clients to keep up with rotations in the CA’s underlying keys without needing to update their code. TUF exists to mitigate a large number of attack vectors that can come into play when working to update code in situ. It’s used by lots of projects for updating long-running telemetry agents in place, delivering secure firmware updates, and more.
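
At the heart of how TUF lets a client follow key rotations is a chain of root metadata: the client accepts a new root only if it is signed by a threshold of keys from the root it already trusts and by a threshold of the new root’s own keys, and its version number is exactly one higher. Below is a heavily simplified sketch of that rule; the flat `keyids`/`threshold`/`version` dictionaries are a hypothetical stand-in for real TUF metadata, and signature verification and expiry checks are assumed to happen elsewhere.

```python
from typing import Dict, Set


def accept_new_root(trusted_root: Dict, new_root: Dict, verified_signers: Set[str]) -> bool:
    """Simplified TUF-style root rotation check.

    `verified_signers` is assumed to hold the key IDs whose signatures over
    `new_root` have already been cryptographically verified.
    """
    # A threshold of the currently trusted root keys must vouch for the new root...
    old_ok = len(verified_signers & set(trusted_root["keyids"])) >= trusted_root["threshold"]
    # ...and the new root must also meet its own signing threshold.
    new_ok = len(verified_signers & set(new_root["keyids"])) >= new_root["threshold"]
    # Versions advance strictly one step at a time.
    next_version = new_root["version"] == trusted_root["version"] + 1
    return old_ok and new_ok and next_version
```

Applied one version at a time, this check lets a client that shipped with an old root walk forward to the current one, which is why rotating the underlying keys doesn’t require a client code update.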

With Sigstore in place, it’s possible to create a tamper-proof paper trail linking artifacts back to CI. This is really important because signing software and capturing details of provenance in a way that can’t be forged means that software consumers have the means to enforce their own rules regarding the origin of the code they’re executing, and we’re excited to share more with you on this in the coming days. Stay tuned!
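
As an example of the kind of rule a consumer might enforce, here is a sketch of a policy check over a provenance statement whose signature has already been verified. It uses the in-toto/SLSA v1 field names, but the policy itself (an allowlist of builders and source repositories) and the function name `meets_policy` are hypothetical; this is not a GitHub or Sigstore API.

```python
import hashlib


def meets_policy(artifact: bytes, statement: dict,
                 allowed_builders: set, allowed_sources: set) -> bool:
    """Hypothetical consumer policy over an already signature-verified provenance statement."""
    digest = hashlib.sha256(artifact).hexdigest()
    # 1. The statement must be about *this* artifact.
    subjects = statement.get("subject", [])
    if not any(s.get("digest", {}).get("sha256") == digest for s in subjects):
        return False
    predicate = statement.get("predicate", {})
    # 2. It must have been produced by a builder we trust...
    builder = predicate.get("runDetails", {}).get("builder", {}).get("id")
    if builder not in allowed_builders:
        return False
    # 3. ...from source we expect.
    deps = predicate.get("buildDefinition", {}).get("resolvedDependencies", [])
    return any(d.get("uri") in allowed_sources for d in deps)
```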

About the Author

Trevor Rosen is the founder of the Package Security team at GitHub, focused on improving supply chain security throughout the SDLC. He has extensive experience in practical information security with a particular focus on CI/CD systems. A veteran of the SolarWinds attack and subsequent response, Trevor is a frequent speaker at supply chain security conferences and sits on the Technical Steering Committee for Sigstore.
